Artificial intelligence, or AI: the phrase and its acronym get bandied about an awful lot. Unfortunately, a lot of companies that say they use AI don’t. It’s a marketing gimmick; maybe they are even targeting shareholders, trying to push up their share price. In fact, many experts in AI don’t even like the term. They much prefer the words machine learning, or ML. Delve deeper, and you come across many more terms: neural networks, deep learning, natural language processing and random forests. So what is AI, exactly?

Marc Warner, CEO at Faculty and member of the UK’s AI Council, gave Information Age what he called a colloquial definition of what AI is — one that the layman can use. “Artificial intelligence, or AI, is the field of research that seeks to get computers to do things that are considered intelligent when done by humans or animals.”

There are more technical definitions, of course.

“Intelligence is the property of an agent that allows it to optimise the environment,” said Marc. So artificial intelligence builds upon that.

The trouble is, to a lot of people, the phrase artificial intelligence conjures up images of machines ruling the world, of a naked Arnold Schwarzenegger coming back from the future to stop Sarah Connor.

The reality is very far from this vision of science fiction.

Which brings us to the reason many experts prefer the term machine learning to artificial intelligence.

What is the difference between machine learning and artificial intelligence?

Marc Warner has a nice explanation — that helps clarify the difference between AI and machine learning.

Up to the mid-1990s, from say 1955 to 1995, “the majority of researchers thought that the way you’re going to be able to achieve intelligent performance and tasks was to create a load of rules.”

So, for example: ‘this is a cup, this is a table’. The trouble is, it is extremely difficult to define exactly what a cup or a table is.

Or take another example. Falon Fatemi, founder of Node, draws a parallel with a raccoon. We all know intuitively what a raccoon is. When we explain a raccoon to children, we don’t outline a set of rules. Instead we might show them a picture, or better still a video, and their brain does the rest.

Marc Warner said that getting computers to recognise a cup proved to be an almost impossible challenge, what researchers called the ‘grounding problem’. It didn’t really get solved until machine learning, “which was basically when they said, ‘screw it, we are not going to put in explicit rules’. We’re just going to show 1000 cups and 1000 saucers and 1000 bottles, and let it work it out itself.”

The idea then is to use machine learning techniques so that an AI algorithm can identify what a raccoon is from data, rather than from a set of rules. We shall be returning to that shortly because, for an algorithm, doing something as simple (simple from a human point of view) as identifying a raccoon requires some pretty advanced stuff.

The rules-based form of AI describes Deep Blue, the IBM computer that famously defeated Garry Kasparov at chess.

Although, intriguingly, Kasparov argued that there was nothing intelligent about Deep Blue — “it is as intelligent as an alarm clock,” he claimed.

But then Kasparov went on to argue that there is nothing especially intelligent about being able to play chess — humble words from one of the greatest chess players ever. But maybe there is something quite profound in those words — things only seem to require intelligence until we can work out how to do them.

Machine learning, by contrast to the rules method of applying artificial intelligence, entails training an algorithm on data — that way it learns.
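The contrast between the two approaches can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the measurements are invented, the hand-written threshold in `rule_based` is hypothetical, and the "learning" is a very simple nearest-average model rather than anything Marc Warner or Falon Fatemi describe using.

```python
# Hypothetical toy data: (height_cm, width_cm) measurements, invented for illustration.
EXAMPLES = [
    ((9.0, 8.0), "cup"),
    ((10.0, 8.5), "cup"),
    ((8.5, 7.5), "cup"),
    ((25.0, 7.0), "bottle"),
    ((28.0, 7.5), "bottle"),
    ((30.0, 8.0), "bottle"),
]


def rule_based(height_cm, width_cm):
    """Rules approach: a person writes the threshold by hand (an assumed rule)."""
    return "bottle" if height_cm > 2 * width_cm else "cup"


def train(examples):
    """Learning approach: average the examples of each label."""
    sums = {}
    for (h, w), label in examples:
        total_h, total_w, count = sums.get(label, (0.0, 0.0, 0))
        sums[label] = (total_h + h, total_w + w, count + 1)
    return {label: (th / n, tw / n) for label, (th, tw, n) in sums.items()}


def predict(model, height_cm, width_cm):
    """Pick the label whose average example is closest to the new object."""
    def squared_distance(centre):
        return (centre[0] - height_cm) ** 2 + (centre[1] - width_cm) ** 2
    return min(model, key=lambda label: squared_distance(model[label]))


model = train(EXAMPLES)
print(predict(model, 9.5, 8.0))    # a short, wide object
print(predict(model, 27.0, 7.2))   # a tall, narrow object
```

Nobody tells the learned model where the boundary between a cup and a bottle lies; it comes from the examples. Feed it poor examples and the boundary will be poor too.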

The key here is the quality of data.

This also opens up the can of worms that is bias and machine learning, but that is a discussion for another day.

So machine learning, then, is just one application of AI, but it happens to be just about the only version that has any real relevance today.

Machine learning is proving to be invaluable in areas such as marketing, health care and autonomous cars.

Neural networks and deep learning

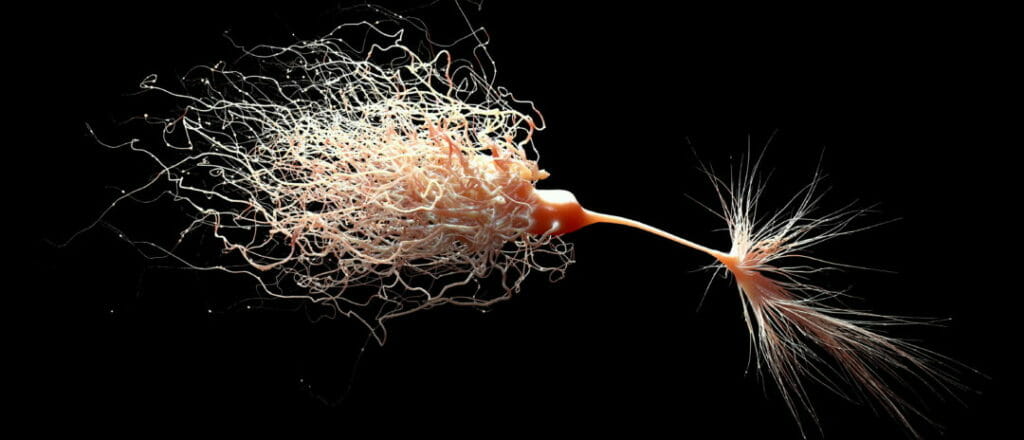

If machine learning is an aspect of artificial intelligence, then deep learning is an aspect of machine learning — furthermore, it is a form of machine learning that applies neural networks.

The idea behind neural networks is to apply a way of learning that mirrors how the human brain works. The human brain is made up of neurons, and these neurons connect with each other via chemical reactions forming synapses.

Deep learning works via layers — layers of artificial ‘neurons’ with each layer responsible for a certain task.

There is one big difference with the human brain and that relates to scale. There are in excess of 100 billion neurons in a human brain, and there are trillions of synapses. Neural networks are not even at a tiny fraction of that scale.

Furthermore, the strength of synapses can vary enormously — quite different from digital technology where there is either a link between neurons, or no link. This effectively provides the human brain with perhaps an infinite number of potential links between neurons.

So neural networks are not at that level and the analogy with the brain, even a scaled down version, is far from precise.

Marc Warner says that neural networks are biologically inspired — and although neural networks are nowhere near the complexity of the brain, he says that we do have a pretty good understanding of how neurons can process images — in that one area, we may be getting close.

Neural networks really started to emerge as a viable application in AI when researchers figured out how to use GPUs (graphics processing units) to run, in parallel, the huge number of calculations that the units, or nodes, or neurons in a neural network require.

So actually, it was a form of convergence — it turned out that technology developed for one purpose — graphics processing — had applications in artificial intelligence.

The late 2000s saw a number of innovations. 2009 saw what has become known as the big bang of neural networks, when GPUs from Nvidia, a hardware company that originally specialised in technology for video games, were used to train neural networks. At around that time, Andrew Ng worked out that using GPUs could increase the speed of deep learning algorithms 1,000-fold.

Describing the latest in neural networks, Marc Warner puts it this way: “they’re enormous beasts… they have say 150 different pieces, and each piece contains multiple layers.”

Each layer might then be given a specific task. Typically they start by identifying edges: one might identify vertical edges, another horizontal edges and another curves. From this, another layer can pull the information together to identify, say, a face, or a raccoon.
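To make the idea of an edge-detecting layer concrete, here is a minimal sketch in plain Python. It assumes a tiny invented 5x5 image and two hand-written 3x3 filters; in a real deep learning network the filter values are learned from data rather than typed in, and the names below are illustrative only.

```python
# A tiny 5x5 grayscale "image": dark (0) on the left, bright (1) on the right.
# Invented data, used only to show what an edge filter responds to.
IMAGE = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]

# Hand-written filters (assumed values; a trained network learns its own).
VERTICAL_EDGE = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]
HORIZONTAL_EDGE = [[-1, -1, -1], [0, 0, 0], [1, 1, 1]]


def apply_filter(image, kernel):
    """Slide a square filter over the image and return the grid of responses."""
    size = len(kernel)
    rows = len(image) - size + 1
    cols = len(image[0]) - size + 1
    responses = []
    for r in range(rows):
        row = []
        for c in range(cols):
            row.append(sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(size)
                for j in range(size)
            ))
        responses.append(row)
    return responses


vertical = apply_filter(IMAGE, VERTICAL_EDGE)
horizontal = apply_filter(IMAGE, HORIZONTAL_EDGE)
print(vertical)    # strong responses where dark meets bright
print(horizontal)  # no responses: this image has no horizontal edge
```

The vertical filter responds where the dark and bright columns meet, and the horizontal filter stays silent. A later layer would combine many such responses into larger shapes.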

Falon Fatemi takes this a step further, talking about what Node calls unsupervised deep learning — “Unsupervised models can essentially be trained on the knowledge that exists on the web,” she says. “That we could never as humans digest and read. There’s more information created in a single day than we could possibly absorb in a lifetime, but a machine can absolutely digest it, learn from it, understand it, and dynamically build knowledge of the world that we can then leverage.”

So deep learning is a subset of machine learning that applies neural networks, and machine learning is a subset of artificial intelligence.

Random Forests

Random forests are another subset of machine learning.

Random Forests build upon decision trees. A decision tree is a simple method of making a decision which we all intuitively understand.

‘Shall I play tennis today?’

‘Do I have the time?’

‘If yes, is the weather appropriate?’

‘If yes, can I get a tennis court?’

Turn these decisions into a diagram and you see branches, a bit like a tree, although the word ‘bit’ seems rather appropriate.
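The tennis questions above can be written as a decision tree in code. This is a hand-written sketch with hypothetical parameter names; in machine learning, the questions and their order would be learned from data rather than chosen by a person.

```python
def shall_i_play_tennis(have_time, weather_ok, court_available):
    """Walk the tree one question at a time; any 'no' ends the walk."""
    if not have_time:
        return False          # first branch: no time, no tennis
    if not weather_ok:
        return False          # second branch: the weather rules it out
    return court_available    # final branch: it all depends on the court


print(shall_i_play_tennis(True, True, True))
print(shall_i_play_tennis(True, False, True))
```

Each `if` is one branch point in the diagram, and each `return` is a leaf.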

Random Forests apply this at scale, applying the concept of wisdom of crowds.

Suppose you play a game of chance in which the odds are 60/40 in favour of you winning. Play the game once and there is a 40% chance you will lose. Play the game 100 times and you are likely to win around 60 times, and so win overall.

But then you can go to another level. If you call 100 plays of the game a set, and you play say 100 sets, then you are likely to win the vast majority of those sets.
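The arithmetic behind this can be checked with a short calculation. The sketch below assumes every game is independent and uses the binomial formula to work out the chance of winning more than half of the games; `prob_win_majority` is a name made up for this example. The parallel with a random forest is loose: its trees vote in a similar way, but in practice they are only partly independent of one another.

```python
from math import comb


def prob_win_majority(n_games, p_win):
    """Exact chance of winning more than half of n_games independent games."""
    needed = n_games // 2 + 1
    return sum(
        comb(n_games, k) * p_win ** k * (1 - p_win) ** (n_games - k)
        for k in range(needed, n_games + 1)
    )


single_game = 0.6
one_set = prob_win_majority(100, single_game)   # win a set of 100 games
many_sets = prob_win_majority(100, one_set)     # win most of 100 such sets

print(f"one game:  {single_game:.3f}")
print(f"one set:   {one_set:.3f}")
print(f"100 sets:  {many_sets:.6f}")
```

A modest edge in a single game becomes a very strong edge once many games are combined by majority, which is the intuition behind having many trees vote.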

Random forests can be applied to many machine learning problems. With autonomous cars, for example, what decision process should the algorithm apply if it is about to crash, in order to minimise damage or risk of injury?

For example, this paper looks at applying random forests to classify images, while this paper looks at an algorithm for developing driving skills using random forests.

Some other terms

We will be looking at natural language processing and Monte Carlo simulations in articles to follow.
