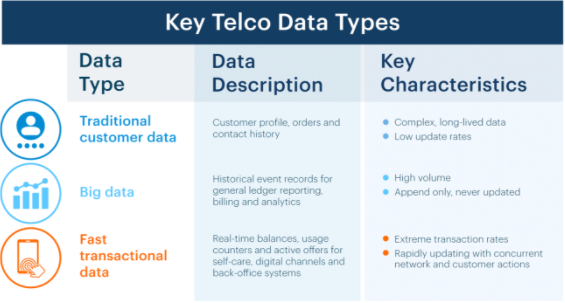

Telcos rely heavily on databases for many core business functions. Following the order-to-cash journey, you encounter three data sets whose very different characteristics dictate the appropriate database technology:

The first set, traditional customer data, is accessed primarily by customer care agents and other customer-facing channels to process orders and requests, using a classic model that is well established across many industries.


Salesforce.com and others provide robust solutions based on relational databases, which are well suited to retrieving and editing the complex history of each customer, a record that can span many years. Relational databases were the first mainstream database technology on the scene and remain highly applicable in this case.

Big data is the second data set. For financial and analytics applications that access Telco event records, however, relational databases have become obsolete. In the flip-phone era, the average subscriber created 10–15 event records per day, one for each phone call.

Ten years into the smartphone era, many subscribers create hundreds to thousands of usage records per day, because usage is no longer tied to performing one task at a time, such as making a phone call.

In addition, real-time analytics and campaign management are becoming table stakes for digital interactions and upselling, so this huge volume of data must be searched and analysed with very low latency.

Because event records are immutable, full transactional semantics are not critical, so the recent wave of web-scale NoSQL databases, such as MongoDB, has shown key advantages for this data category. These databases offer highly performant, cost-effective storage for vast volumes of immutable data that can be used for post-event processing.


The third set is fast transactional data: network and customer events processed in real time as they occur. This is where things get really interesting, because it is the engine room for any Telco that wants to become a digital service provider (DSP).

Keeping up with the volume of real-time transactions used to be straightforward. Postpaid subscribers, billed monthly, were all managed by batch systems. Early prepaid systems, which charge customers in advance, ran in real time but handled only 10–15 calls per day per subscriber and were largely just counting minutes, the same business logic that runs a parking meter.
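To make the parking-meter comparison concrete, here is a minimal sketch of that early prepaid logic, assuming the balance is held as a single count of minutes. The class `PrepaidMeter` and its `authorise_call` method are hypothetical names for illustration, not any vendor's API.

```python
from dataclasses import dataclass


@dataclass
class PrepaidMeter:
    """Hypothetical early prepaid account: one counter of remaining minutes."""
    minutes_left: int

    def authorise_call(self, requested_minutes: int) -> int:
        """Grant as many of the requested minutes as remain, and deduct them."""
        granted = min(requested_minutes, self.minutes_left)
        self.minutes_left -= granted
        return granted


meter = PrepaidMeter(minutes_left=30)
print(meter.authorise_call(12))  # 12
print(meter.authorise_call(25))  # 18, because only 18 minutes remained
print(meter.minutes_left)        # 0
```

With one number per subscriber and only a handful of updates per day, there is little for concurrent requests to collide over.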

The world today is fundamentally different: all digital services are by nature real-time and on-demand. People use their phones more than 150 times per day, creating hundreds to thousands of unique transactions per smartphone, all of which must be processed immediately and accurately because they affect the consumer’s balances, data package, and entitlements.

Payment methods are also converging: customers combine prepaid and postpaid offers but expect the same precise, instant experience that was previously available only with prepaid. Tariff plans have also become far more complex, layered with content entitlements and other promotions.

There is also greater demand for self-care journeys and on-the-fly service personalisation, all delivered through modern digital channels that rely on instant interactions.


If you want to keep your customer balances correct, give instant access to services and ensure customers pay correctly, you need a database that can keep up with the transaction and update volumes under every circumstance.

You can’t let customers spend the same dollar twice; it’s bad for business. So you need to maintain accuracy right down to the cent, every second of every day. Add ‘always on’ smartphones, millions of customers expecting instant gratification, and devices that share pools of data, and you can see why Telcos need one hell of a database.
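The double-spend risk is easiest to see by replaying two concurrent purchases step by step. This is a simplified sketch, assuming a hypothetical `interleaved_purchases` helper and an in-memory dict standing in for the database: both transactions read the balance before either one writes, so both pass the funds check.

```python
def interleaved_purchases(balance: int, price: int) -> tuple:
    """Replay two purchases whose read and write steps interleave with no locking."""
    account = {"balance": balance}

    # Both transactions read the balance before either one writes.
    seen = {"A": account["balance"], "B": account["balance"]}

    approved = []
    for txn in ("A", "B"):
        # Each transaction checks its own (now stale) read, then writes back.
        if seen[txn] >= price:
            account["balance"] = seen[txn] - price
            approved.append(txn)
    return approved, account["balance"]


approved, final_balance = interleaved_purchases(balance=100, price=100)
print(approved, final_balance)  # ['A', 'B'] 0
```

Both purchases are approved against a $100 balance, and the second write silently overwrites the first (a classic lost update). Ruling out exactly this interleaving is what transactional guarantees are for.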

To maintain accurate real-time data in this new world of digital chaos, you need a database that is both ACID compliant¹ (always correct!) and able to handle extreme transaction volumes with very low latency.

The unique challenge facing databases that support Telco operations is the high write intensity of the data. Compounding it, network systems and customer activity generate unpredictable, highly concurrent actions, producing extremely high-volume, high-complexity updates to the database.

The traditional relational approach to ACID compliance is to lock the data used by each transaction, thereby serialising any transactions that would otherwise collide (overlap) and cause a data integrity violation.
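A minimal sketch of the locking approach, assuming Python threads stand in for concurrent transactions and each account has its own lock. `LockingLedger` and `debit` are hypothetical names, and this is a simplification of what a relational engine does. A transaction locks every account it touches (in sorted order, to avoid deadlock), does its work, then releases, so overlapping transactions queue up while non-overlapping ones can proceed.

```python
import threading


class LockingLedger:
    """Hypothetical sketch of lock-based concurrency control with one lock per account."""

    def __init__(self, balances: dict):
        self.balances = dict(balances)
        self.locks = {acct: threading.Lock() for acct in balances}

    def debit(self, accounts: list, amount: int) -> bool:
        """Debit `amount` from every listed account, or from none of them."""
        # Lock all touched accounts in a fixed (sorted) order so two transactions cannot deadlock.
        ordered = sorted(set(accounts))
        for acct in ordered:
            self.locks[acct].acquire()
        try:
            # While the locks are held, no overlapping transaction can read or write these balances.
            if all(self.balances[a] >= amount for a in ordered):
                for a in ordered:
                    self.balances[a] -= amount
                return True
            return False
        finally:
            for acct in reversed(ordered):
                self.locks[acct].release()


ledger = LockingLedger({"alice": 500})
results = []


def worker() -> None:
    results.append(ledger.debit(["alice"], 1))


threads = [threading.Thread(target=worker) for _ in range(1000)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(sum(results), ledger.balances["alice"])  # 500 0
```

Every debit against the same account waits its turn on one lock. That keeps the balance correct, and it is also why latency climbs when thousands of transactions contend for the same data.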


This approach works well for ensuring data integrity in a sprawling, low-velocity data set such as traditional customer data, but it severely degrades performance and latency in high-volume, high-complexity real-time systems.

In-memory databases like TimesTen provide a small amount of relief; however, they are still built with the same locking principles, so they have no hope of keeping up with the flood of on-demand digital transactions.

Web-scale technology such as Cassandra is also ill-suited to transaction processing, because it relaxes certain ACID guarantees to handle the volume. It therefore cannot guarantee the right answer when a customer checks their balance, or when a Telco must meet regulatory requirements by notifying customers that they are nearing the end of a data package.
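The failure mode can be sketched with a toy model of asynchronous replication. This is a deliberate simplification, not Cassandra's actual read/write path (Cassandra lets clients tune consistency per request), and `EventuallyConsistentStore` is a hypothetical name: a write is acknowledged by one replica and copied to the others later, so a read that lands on a lagging replica returns an old balance.

```python
from typing import Optional


class EventuallyConsistentStore:
    """Toy model of asynchronous replication (not Cassandra's real read/write path)."""

    def __init__(self, num_replicas: int = 2):
        self.replicas = [{} for _ in range(num_replicas)]
        self.pending = []  # (replica_index, key, value) updates still in flight

    def write(self, key: str, value: int, coordinator: int = 0) -> None:
        # The write is acknowledged as soon as one replica has it...
        self.replicas[coordinator][key] = value
        # ...and is queued for the others, to be applied later.
        for i in range(len(self.replicas)):
            if i != coordinator:
                self.pending.append((i, key, value))

    def read(self, key: str, replica: int) -> Optional[int]:
        # A read is answered by whichever replica it reaches, with no cross-check.
        return self.replicas[replica].get(key)

    def sync(self) -> None:
        # Background replication eventually brings every replica up to date.
        for i, key, value in self.pending:
            self.replicas[i][key] = value
        self.pending.clear()


store = EventuallyConsistentStore()
store.write("data_mb_left", 500)
store.sync()
store.write("data_mb_left", 20)                 # usage has nearly exhausted the package
print(store.read("data_mb_left", replica=1))   # 500: stale, so a "nearly used up" check is missed
store.sync()
print(store.read("data_mb_left", replica=1))   # 20
```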

Recently, some Telco vendors have layered ACID compliance on top of web-scale databases. Look under the covers, however, and this approach reimposes the traditional locking model, destroying the “web-scale” properties of the database underneath.

Michael Stonebraker, a pioneer in the world of database technology, recognised these limitations while building a massively scalable stock trading platform. His solution was to build VoltDB with a radical new approach to ACID compliance.


Since each stock trade affects a very small set of data, and the data needed to trade Stock A does not overlap with the data for Stock B, he could partition the database into many small, independent data sets and run all transactions for any one data set on a single processing thread.
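The partitioning idea can be sketched as one queue and one worker thread per partition, with each stock symbol routed to a fixed partition by a stable hash. `PartitionedExecutor` is a hypothetical illustration of the design, not VoltDB's implementation, and Python's global interpreter lock means it shows the structure rather than real parallel speed-up.

```python
import queue
import threading
import zlib


class PartitionedExecutor:
    """Hypothetical sketch: each partition owns its data and one thread applies its trades in order."""

    def __init__(self, num_partitions: int):
        self.num_partitions = num_partitions
        self.queues = [queue.Queue() for _ in range(num_partitions)]
        # Per-partition position tables; each is only ever touched by its own worker thread.
        self.positions = [{} for _ in range(num_partitions)]
        self.threads = [
            threading.Thread(target=self._run, args=(p,), daemon=True)
            for p in range(num_partitions)
        ]
        for t in self.threads:
            t.start()

    def partition_for(self, symbol: str) -> int:
        # Stable hash, so a given stock always routes to the same partition.
        return zlib.crc32(symbol.encode()) % self.num_partitions

    def submit(self, symbol: str, qty: int) -> None:
        self.queues[self.partition_for(symbol)].put((symbol, qty))

    def _run(self, p: int) -> None:
        data = self.positions[p]
        while True:
            item = self.queues[p].get()
            if item is None:  # shutdown signal
                return
            symbol, qty = item
            # No lock needed: only this thread reads or writes partition p.
            data[symbol] = data.get(symbol, 0) + qty

    def shutdown(self) -> dict:
        # Ask every worker to stop after draining its queue, then collect the results.
        for q in self.queues:
            q.put(None)
        for t in self.threads:
            t.join()
        totals = {}
        for part in self.positions:
            totals.update(part)
        return totals


ex = PartitionedExecutor(num_partitions=4)
for _ in range(1000):
    ex.submit("ACME", 1)
    ex.submit("GLOBEX", -1)
totals = ex.shutdown()
print(totals["ACME"], totals["GLOBEX"])  # 1000 -1000
```

Because only one thread ever touches a partition's data, the workers need no locks: trades for the same symbol are serialised by their queue, while different partitions proceed independently.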

This effectively serialises all trades of the same stock while letting trades of different stocks run at full speed in parallel, with no locking. The approach works brilliantly for simple transactions on isolated data sets, but it fails quickly when applied to the complex, overlapping data sets that modern Charging and Policy logic requires, especially with the explosion of shared plans.
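A small sketch shows why shared plans break the single-partition assumption. It assumes subscribers are partitioned by a simple modulo of a hypothetical numeric subscriber ID over a hypothetical eight partitions; a real system would choose its own partition key.

```python
NUM_PARTITIONS = 8  # hypothetical partition count


def partition_for(subscriber_id: int) -> int:
    """Route each subscriber's balances to one partition (simple modulo, for illustration)."""
    return subscriber_id % NUM_PARTITIONS


def partitions_touched(subscriber_ids: list) -> set:
    """Partitions that a single charging transaction must read or update."""
    return {partition_for(s) for s in subscriber_ids}


# A single subscriber's data session touches only that subscriber's partition.
print(partitions_touched([1001]))  # {1}

# Usage against a shared family data pool must update every member's counters,
# so one transaction now spans several partitions and can no longer run on one thread.
family_plan = [1001, 1002, 1003, 1004]
print(sorted(partitions_touched(family_plan)))  # [1, 2, 3, 4]
```

Once a transaction spans several partitions, those partitions must coordinate, reintroducing the waiting that partitioning was meant to remove. Keying by plan instead of by subscriber only moves the problem once a subscriber belongs to several overlapping plans or pools.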

In short, the Digital Telco environment presents unique challenges that none of these database technologies addresses, and those challenges will only intensify as mobile devices, and the data they consume, multiply at breakneck pace.

In my final blog post in this series, I will discuss alternative approaches to handling high-volume, high-complexity transactional data and how Telcos can leverage new database technologies to address the complexity of their IT environment.

¹ ACID (Atomicity, Consistency, Isolation, Durability) is a set of properties that some databases guarantee for their transactions; among other things, it ensures that transactions run in parallel produce the same end state as if they had been run serially. Financial systems such as Charging & Policy must provide a precise, always-correct answer and therefore require ACID compliance. Web-scale database technologies achieve their scalability by relaxing some of these guarantees.

Sourced from Dave Labuda, founder, CEO and CTO of MATRIXX Software