In May 1997, a smart new computer built by IBM, called ‘Deep Blue’, defeated the reigning world chess champion, Garry Kasparov.

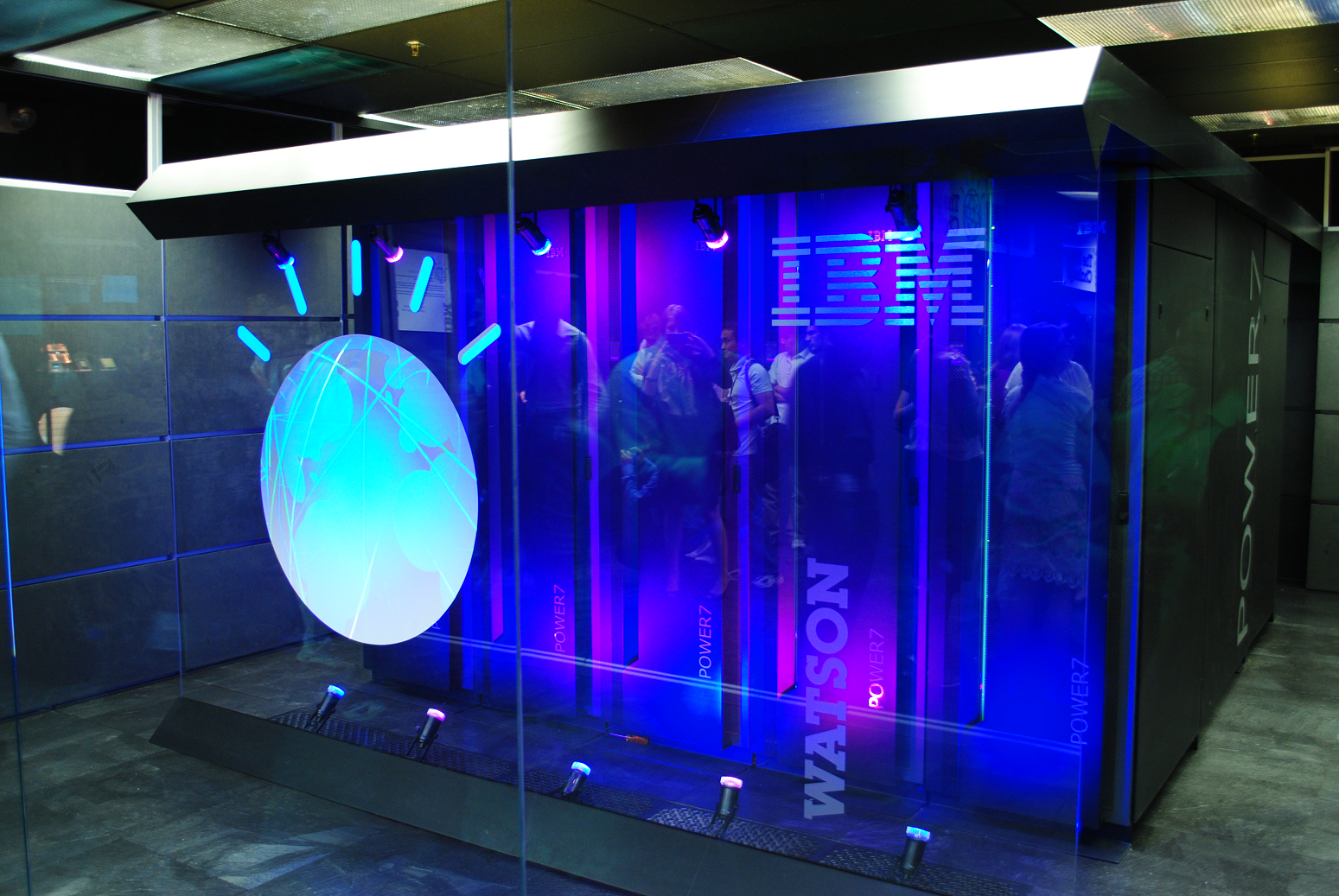

In February 2011, IBM did it again: its latest intelligent computer, dubbed Watson after IBM’s founder, beat the two most successful champions of the popular quiz show Jeopardy! This was a showcase moment for IT, heralding a new era of computing: machines that can think and communicate in a human-like way.

Needless to say, Watson did not spring fully formed from IBM’s drawing boards. It was the product of decades of industry research in artificial intelligence (AI), the internet, search engine technology, robotics, natural language question answering, analytics and a host of other innovations.

During the contest Watson, which was not connected to the Internet, sifted, sorted, compared, matched, analysed and crunched millions of pages of information on dozens of possible topics, all of which had been loaded into it beforehand.

It then rated the candidate answers by confidence, selected the best one and delivered it in a synthesised, human-like voice. It accomplished all of this in under three seconds.

Getting smarter together

Clearly, Watson wasn’t designed just for game shows. The goal was to create a new generation of ‘cognitive assistants’ that augment human capabilities not only through spectacular data crunching, but also by understanding and analysing complex real-world scenarios, forming hypotheses, drawing conclusions and advising on outcomes.

Through this man-machine interaction, a virtuous circle is established in which both partners grow more intelligent, to the benefit of commerce, civilisation and the very future of our planet.

It is reassuring to think that in this partnership humans reign supreme, at least for the foreseeable future. According to Gartner’s August 2013 report, ‘Emerging Technologies Hype Cycle for 2013: Redefining the Relationship’, the main benefit of having machines work alongside humans is access to the best of both worlds: productivity and speed from machines, and emotional intelligence and the ability to handle the unknown from humans.

“Cognitive computing is about man and machine collaborating to both become smarter,” says Jackie Fenn, vice president, Gartner Fellow and the report’s co-author. “However, at the end of the day machines are essentially decision-making tools for humans.

“If you think of the multi-dimensional complexities, computations, coordination, sub-conscious and instinctual responses involved in a simple action like crossing a busy street whilst talking to a friend, this involves human specialities not easily replicated.”


Interacting with humans

Essentially, Watson won Jeopardy! by doing what computers do best: crunching vast amounts of information at great speed, using sophisticated algorithms to score each candidate answer, rank the candidates from most to least confident and then select the winner.

According to Ton Engbersen, a big data scientist at IBM Research, the most challenging part of developing Watson was its natural language question answering (NLQA) capability.

This component is crucial if computers are to step out of the IT department and take their place alongside doctors, teachers, engineers, lawyers, City traders, contact centre agents, city planners, police, meteorologists, astronomers, environmentalists and professionals in the myriad other fields to which Watson’s descendants will one day be applied.

“Right now the science of cognitive computing is in the formative stages,” he says. “To become machines that can learn, computers must be able to process sensory as well as transactional input, handle uncertainty, draw inferences from their experience, modify conclusions according to feedback and interact with people in a natural, human-like way.” In other words, they need to be able to learn the same way humans do.

Computers will also need to interact in ways that fit how people live and work, rather than, as has traditionally been the case, forcing humans to adapt to the technology. With the advent of smartphone interfaces and intuitive tools such as Apple’s Siri, this human-centric transition is gaining momentum rapidly.

Watson at work

What of Watson in the workplace? Zachary Lemnios, vice president of strategy for IBM Research, says: “Watson demonstrates that cognitive computing is real and delivering value today. It’s already starting to transform the ways clients navigate big data and is creating new insights in healthcare.”

Since Jeopardy!, Watson has been working with oncologists at the Memorial Sloan–Kettering Cancer Center in New York, helping them make diagnoses, map care pathways and train students.

This application plays to Watson’s strengths: helping doctors keep up with streams of information on the latest trends, best practices and breakthroughs, and crunching hundreds of thousands of archived case histories to spot patterns, anomalies and indicators of the best treatment.

Watson is also being piloted in call centres, where it analyses customer data and suggests responses to agents in real time; at some point an automated version of Watson might even replace customer service representatives (CSRs).

Other applications being explored are in financial services, civic management and meteorology, all of which deal with high volumes of fast-changing information and many alternative scenarios.

Fenn sees smart machines moving in two directions: on the one hand working with humans as expert assistants, and on the other replacing us. Robots have already taken over much of the assembly line, and driverless cars and pilotless planes are just a matter of time.

Automated micro-machines called ‘smartdust’, RFID sensors, CCTV cameras and a plethora of other smart devices can monitor and control everything from the temperature in our living rooms to our lifestyle choices, issuing an alert if, for example, we enter a toxic environment or eat something unhealthy. Smart sensors are even being used in neonatal wards, attached to premature babies to monitor their vital signs.


Going to the mountain

All of the structured and unstructured data that is being generated in this mushrooming ‘Internet of Things’ (or as Fenn calls it, ‘the raw data of life’) is putting a strain on today’s computer processing capabilities.

It is estimated that we humans currently generate more than 3 exabytes of data every day (an exabyte is a quintillion bytes, or a billion gigabytes). Viewed as a natural resource, this stream of information provides a rich source of intelligence for smart machines, but harvesting it requires a new approach to technology.

How do we sift through all the noise to find the nuggets that count? And how do we do it in a way that is timely and doesn’t require vast amounts of energy and expense?

Engbersen thinks we need to take Mohammed to the mountain, so to speak. “At the moment, data are fetched from here, there and everywhere – from databases, the cloud, networks and wherever else they reside around the world – and then brought to the processing centre. It is faster and much more efficient to take the processing functions to the data,” he says.

Enhanced DRAM technology and in-memory analytics take this approach, enabling far greater quantities of information to be stored and processed on the same chip. But what we really need is an entirely new paradigm for computing.

The next wave of software smarts

At the software end the challenges are equally profound. One of the biggest hurdles for computers is dealing with uncertainty. To learn, adapt and function in the real world, cognitive systems need to handle probabilities, draw inferences and extract new insights from the facts available.

To this end, strides are being made in advanced algorithms and analytics. Stochastic optimisation uses probability theory to find the best decisions when some of the inputs are random or uncertain. Predictive analytics evaluates current and historical facts to make predictions about future events, and pervasive analytics provides tools to handle unstructured data (sensor readings, video, images, etc.).

To cope with high volumes of information, contextual analytics supports data matching and pattern recognition, and streams analytics enables computers to take in, analyse and correlate information from thousands of real-time sources at speeds of millions of events, messages or transactions per second.
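Stream analytics engines typically process each event as it arrives and keep only a bounded window of recent history, rather than storing everything first and analysing it later. The toy, single-threaded Python sketch below illustrates that idea under stated assumptions: events arrive in timestamp order, the window length and source names are hypothetical, and ‘correlation’ is reduced to a simple rule that fires when enough distinct sources report within the same window. It is not IBM’s product; production engines spread this work across many machines to reach the throughput described above.

```python
from collections import Counter, deque


class SlidingWindow:
    """Keep only events from the last `window` seconds, updated incrementally."""

    def __init__(self, window: float):
        self.window = window
        self.events = deque()        # (timestamp, source) pairs, oldest first
        self.per_source = Counter()  # event count per source inside the window

    def add(self, timestamp: float, source: str) -> None:
        """Ingest one event (assumed to arrive in timestamp order), then evict stale ones."""
        self.events.append((timestamp, source))
        self.per_source[source] += 1
        self._evict(now=timestamp)

    def _evict(self, now: float) -> None:
        # The window is the half-open interval (now - window, now].
        while self.events and self.events[0][0] <= now - self.window:
            _, old_source = self.events.popleft()
            self.per_source[old_source] -= 1
            if self.per_source[old_source] == 0:
                del self.per_source[old_source]

    def distinct_sources(self) -> int:
        return len(self.per_source)


def correlated(window: SlidingWindow, min_sources: int) -> bool:
    """Hypothetical rule: fire when at least `min_sources` distinct sources report in the window."""
    return window.distinct_sources() >= min_sources
```

With a 10-second window, events from `sensor-a` at t=0, `sensor-b` at t=3, `sensor-c` at t=12 and `sensor-a` at t=13 leave only `sensor-c` and `sensor-a` in the window, so `correlated(w, 2)` is true and `correlated(w, 3)` is false. Because each event is appended once and evicted once, the per-event cost stays constant on average however long the stream runs.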

To accelerate the development of cognitive computing, IBM has just announced a collaboration with four leading universities: MIT, Carnegie Mellon University, New York University and Rensselaer Polytechnic Institute.

Together they plan to focus on a number of key areas including processing power, data availability, algorithmic techniques, AI, natural interaction and automated pattern recognition.

But all of this is still just the tip of the iceberg, says Lemnios: “Cognitive systems will require innovation breakthroughs at every layer of information technology, starting with nanotechnology and progressing through computing systems design, information management, programming and machine learning, and finally the interfaces between machines and humans.”

When can we expect the future to arrive? In 10 years we may look back at Watson and view its accomplishments as mere baby steps. Will that famously renegade computer HAL from ‘2001: A Space Odyssey’ (whose name is often read as a play on IBM, each letter being one step earlier in the alphabet) ever take over the world?

“Who knows where we will be in 100 years,” answers Engbersen, “but for now we have a lot of work to do in developing cognitive systems.”

Perhaps the best way to think about tomorrow’s army of cognitive assistants is as co-creators in the journey towards a better world: working with humans to explore space, feed people, save the planet from climate catastrophe and further the cause of peace, prosperity and the growth of intelligence for the enhancement of all.