Today, examining large amounts of data to uncover hidden patterns, correlations and other insights is relatively effortless. For those at the forefront, machine learning, a subset of AI, can analyse more complex data faster. Alternatively, by applying effective data management principles, many organisations can establish a master flow of their processes in order to automate them. Apache Hadoop, a free, open-source software framework for storing and processing large data sets across clusters of computers, is available to any organisation and has made all of this relatively easy. But now that we are capturing this data, how are we using it?

McKinsey’s ‘Analytics comes of Age’ study shows that data use has been reducing transaction costs for years. The accelerating growth of electronic data has led many organisations to set up wide consumer data lakes and integrated databases, and to optimise their products and services to better meet users’ expectations. Data sets and sources have become great unifiers, creating new, cross-sectional competitive dynamics. Regardless of industry or company size, data squeezes into every nook and cranny. It has revolutionised supply chain operations, banking, manufacturing, retail and sales inventory, and has even generated its own industry sector, with venture capital funding for data start-ups exceeding $19bn. There is no doubt that we are in the thick of learning from data, but is this being done ethically, or have organisations limited its use to increasing their bottom line?

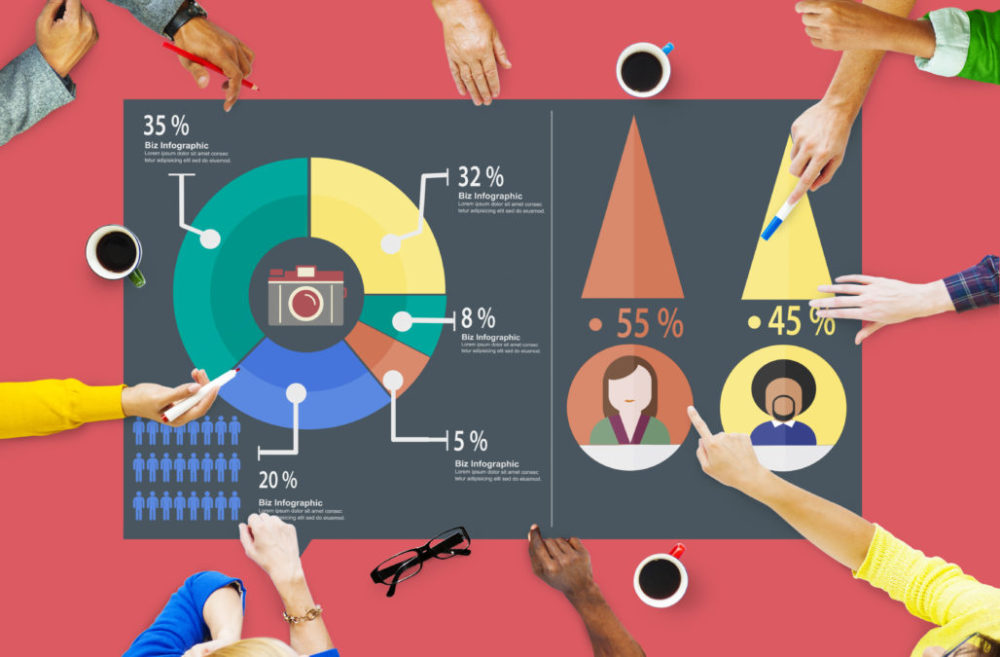


It is widely accepted that learning analytics can influence decision making. A Harvard Business Review Analytic Services study, as reviewed by SAS, shows that real-time customer analytics are a strategic priority. Early adopters are already reaping tremendous benefits in engagement and revenue, most successfully by creating personalised customer experiences at scale. However, whether information technologies and digital media can systematically distinguish cases of online manipulation has not been thoroughly explored. Some researchers suggest that, at its core, manipulation is hidden influence: the covert subversion of another person’s decision-making power. For a number of reasons, data analytics makes it significantly easier to engage in manipulative practices, and therefore makes the effects of such practices deeply debilitating. Given that respect for individual autonomy is a bedrock principle of liberal democracy, the threat of online manipulation is cause for grave concern if organisations and their leaders are unaware of how using data for such activities can amplify these harmful capacities.

Recently, I was chatting with a number of organisations about their new learning analytics software. At first glance it seemed great. I could use their entirely wireless technology to monitor our physical spaces: a digital thermometer to track room temperature, and cameras that claim to detect when an individual is engaged or at their most efficient, with the potential to create a ‘perfect’ environment for users. I could control all of this either myself or through a very comprehensive automated tool. However, I then started to wonder how I would feel if I knew someone was controlling the temperature of my office, and how this could affect my mood. Too hot, and would I become combative and angry? Too cold, and would I become more acquiescent? I also began to question whether any piece of technology could really capture enough data about such a variety of people to strike a balance. It’s not just me in the room: would my colleagues and I all be happy, when we already argue over the air conditioning? More alarmingly, if this could be achieved, who takes on this responsibility, and who vets the process of managing such an acute level of detail, one that could affect human behaviour? I consider myself a professional, but we cannot be naïve enough to think that some would not use this to their own personal advantage. If I can question the ethics of such a trivial example, how will you and your organisation plan and frame your own conversations in the face of such volume and depth of data insight?


Researchers from Europe are appealing to our ethical conscience as organisations continue to create, use and absorb the new data that commands the digital future: “… we are in the middle of a technological upheaval that will transform the way society is organised”. Some software platforms are already moving towards persuasive computing, in which tailored platforms steer us through entire courses of action, earning corporations billions. This trend marks a shift from programming computers to programming people. We have already seen it become increasingly popular in politics, where ‘big nudging’ acts as a sort of digital sceptre that allows its wielder to govern the masses efficiently, steering them towards healthier or more environmentally friendly behaviour by means of a ‘nudge’. As you continue to build your digital landscape, it is critical to ensure that widespread technologies are compatible with society’s core values, with human rights, and with the democratic principles that guarantee your stakeholders’ informational self-determination. This is not for the faint-hearted: are you prepared to spearhead the conversation in your organisation?

Further reading: “Data for Humanity” (see “Big Data for the benefit of society and humanity” for guidance and principles).
