In the story of technology, where do you begin? In my new book, Living in the Age of the Jerk, co-written with Julien de Salaberry, we go back in time, way back. But for the sake of this article, I begin the story of key events in technology with Charles Babbage, who designed the first computer.

I could have begun with the invention of the steam engine, or the early innovations of the 18th-century industrial revolution, such as Arkwright’s Water Frame. Or I could have gone even further back, to the printing press, the invention of writing, the wheel, or even the point when our early ancestors learned how to make fire.

But in the book, we focus on what we call the fifth industrial revolution, a revolution that will follow on immediately from, and to an extent overlap with, the fourth industrial revolution. The fifth industrial revolution will culminate in the augmentation of humanity, changing us so completely that you could even argue we are no longer the same species.

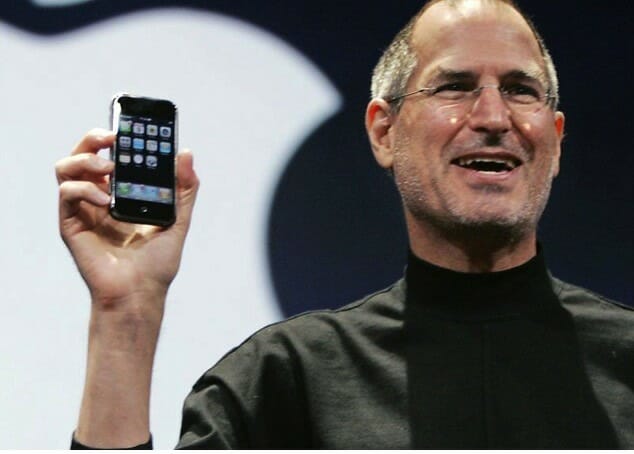

In a way, the iPhone and Android are fifth industrial revolution products: while they have not literally augmented us, for many of us they are akin to a new appendage. In the very near future they will be supported by always-on AI assistants, which we may even access via our thoughts. Our memories could be enhanced, even our intelligence. We could be physically augmented too, like bionic men and women. If you ever saw the 1970s TV series The Six Million Dollar Man, you will know what I mean. Finally, there is our DNA: it won’t be long before even this is changed, literally changing us physically.

So what are the key events in the story of technology told above?

- 1987: Iain M. Banks’ Culture series of novels begins with ‘Consider Phlebas’. The books introduce the idea of neural lace, a fictional technology that enhances the brain with a mesh of artificial neurons, creating an interface between machine and brain.

- 2003: The human genome is sequenced at a cost of $2.7 billion.

- 2005: Boyden ES et al. publish a paper in Nature Neuroscience showing how a light-sensitive protein from a certain type of algae can be used to activate neurons with light. This was a key moment in the development of optogenetics, technology for turning targeted neurons in the brain on and off, with potentially extraordinary applications.

- 2007: Apple announces the iPhone, and later in the year Google reveals Android.

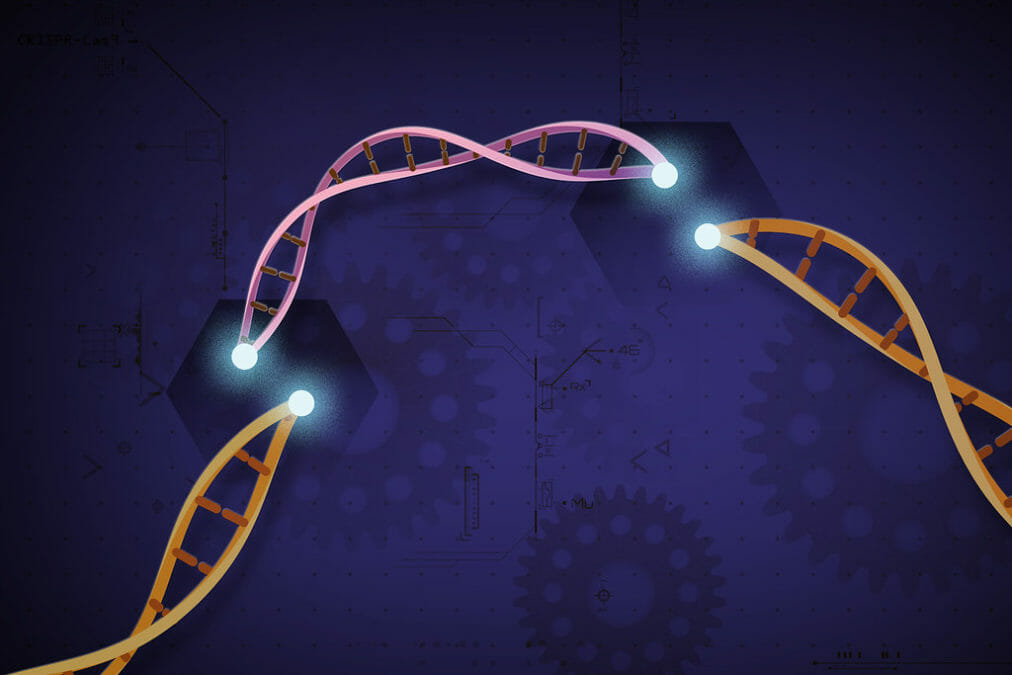

- 2012: Jennifer Doudna and Emmanuelle Charpentier, from the University of California, Berkeley and Umeå University in Umeå, Sweden, publish a paper showing how CRISPR/Cas9, a system bacteria use to protect themselves from viruses by cutting viral DNA, can be programmed to cut specific DNA sequences.

- August 2013: researchers at the University of Washington claimed to have created the first human-to-human brain interface, with one individual able to send instructions for playing a simple video game, directly from his brain, to another individual on the other side of the campus. Chantel Prat, assistant professor in psychology at the UW’s Institute for Learning & Brain Sciences, said: “We plugged a brain into the most complex computer anyone has ever studied, and that is another brain.”

- 2014: researchers “successfully transmitted the words ‘hola’ and ‘ciao’ in a computer-mediated brain-to-brain transmission from a location in India to a location in France.”

- 2016: Neuralink Corporation is founded by Elon Musk. The company’s purpose is to develop implantable brain-machine interfaces.

- 2017: Facebook reveals work led by Regina Dugan on technology that will initially enable individuals to ‘type by thought’.

This all sounds very much like science fiction. Maybe we need to go back further and look at key moments in the story of technology with more practical applications today.

Key events in the story of technology: the list

- 1832: Charles Babbage invents the first computer.

- 1904: John Ambrose Fleming invents the vacuum tube.

- 1936-1938: Konrad Zuse creates the Z1, a mechanical binary programmable computer often described as the first of its kind.

- 1936: Alan Turing proposes what has become known as the Turing Machine.

- 1940: Alan Turing’s Bombe is able to decrypt the Enigma code.

- 1943: Thomas Watson, president of IBM, reportedly states: “I think there is a world market for maybe five computers.”

- 1947: John Bardeen and Walter Brattain, with support from colleague William Shockley, demonstrate the transistor at Bell Laboratories in Murray Hill, New Jersey.

- 1949: Popular Mechanics states: “Where a calculator like ENIAC today is equipped with 18,000 vacuum tubes and weighs 30 tons, computers in the future may have only 1000 vacuum tubes and perhaps weigh only 1½ tons.”

- 1959: Richard Feynman gives a lecture with the title: “There’s plenty of room at the bottom.” Although Feynman’s role in its development may be exaggerated, this is considered by many to be the point when the concept of nanotechnology was first conceived.

- 1965: Gordon Moore, who later co-founded Intel, observes that the number of transistors on a computer chip is doubling roughly every year; in 1975 he revises the rate to roughly every two years, and so Moore’s Law is defined.

- 1966: K. C. Kao and G. A. Hockham publish “Dielectric-fibre surface waveguides for optical frequencies”, proposing optical fibre communication.

- 1969: The first packet-switched message is sent between two computers over ARPANET, marking the beginning of the internet.

- 1976: The Apple I is released.

- 1980: John Bannister Goodenough invents the cobalt-oxide cathode, making possible the lithium-ion battery that now sits in smartphones and electric cars.

- 1981: IBM launches its first personal computer, the IBM PC.

- 1985: Akira Yoshino’s work enables industrial-scale production of the lithium-ion battery, representing the birth of the lithium-ion battery as we know it today.

- 1989: Sir Tim Berners-Lee at CERN proposes the World Wide Web; HTML, the markup standard that made the web possible, follows soon after.

- 1997: IBM’s Deep Blue defeats Garry Kasparov at chess.

- 2001: A first draft of the human genome is published; the sequence is declared complete in 2003.

- 2004: Prof Andre Geim and Prof Kostya Novoselov from the University of Manchester isolate graphene.

- 2005: Boyden ES et al. publish their paper on a light-sensitive algal protein in Nature Neuroscience, a key step for optogenetics. See above.

- 2007: Apple releases the iPhone.

- 2011: IBM’s Watson defeats two Jeopardy champions.

- 2012: Jennifer Doudna and Emmanuelle Charpentier publish their paper on CRISPR/Cas9. See above.

- 2017: AlphaGo, software from Google subsidiary DeepMind, defeats the world’s best player at the Chinese game of Go, something previously considered impossible for a computer.

Key events in the story of technology: forecasts

Okay, so let’s fast forward. What next? As they say: “It is difficult to make predictions, especially about the future.” Even so, I am having a go. From the perspective of the year 2030, what will be the key events in the story of technology in the 2020s?

- 2022: AI is harnessed in the battle against fake news. This will be the start of a ten-year process.

- 2023: Evidence shows that autonomous cars are marginally safer than cars driven by people.

- 2024: Highly accurate real-time voice translation devices, linking earphones via the cloud to AI translation tools, become available, meaning that we can talk to anyone on earth. A public debate then ensues: why do we need to learn more than one language if translation tools are so accurate?

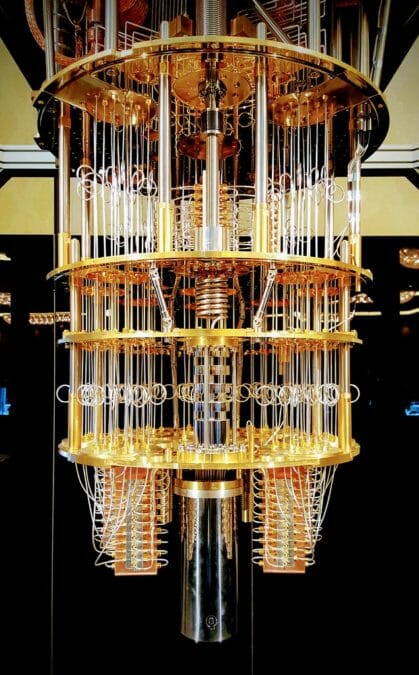

- 2024: Quantum computers, accessed via the cloud, begin to emerge as an alternative to conventional computers for certain tasks. Rose’s Law, a Moore’s Law for quantum computers, applies to certain types of quantum computer, meaning that the number of qubits in scalable quantum computing architectures doubles every six to 12 months.

- 2025: The cost of renewable energy and energy storage, which has been falling for decades, reaches a tipping point, when the economic benefits of renewables over fossil fuels become overwhelming. But will this be enough to win the war against climate change? Scientists will say we need to do more. As the evidence for climate change becomes plain for all to see, there will be a backlash against companies and politicians who were seen as denying climate change or who deliberately tried to confuse public understanding.

- 2027: Projected date for automated truck drivers (https://arxiv.org/abs/1705.08807).

- 2030: Autonomous cars become so much safer than conventional cars that nearly all new cars are autonomous. The cost of insuring cars driven by humans escalates. Rules are introduced to heavily regulate traditional cars, to protect innocent passengers in autonomous cars from human drivers.

And what about the 2030s and beyond? What will be the key events in the story of technology in the 2030s, and the years after?

These are just guesses, of course. See what you think. (Note: some of these predictions come from a recent report, When Will AI Exceed Human Performance? Evidence from AI Experts.)

- 2031: The age of the car-sharing economy dawns. It will be called TaaS: transport as a service. With this development, the psychology of driving changes. We will no longer see our car as ‘our pride and joy’, or even as an appendage (some male drivers see their automobile as an extension of their manhood, a way to attract potential mates), because cars will no longer belong to us. As a consequence, cars will become more functional, and there will be less variety. Since the average car is parked for around 95 per cent of the time, the TaaS model will mean consumer demand could be met with just 5 to 20 per cent of today’s cars. This will have a devastating effect on the auto industry.

- 2031: Emerging markets begin to establish themselves as the new economic powerhouses, for several reasons, but primarily because, unlike the West, they are not hampered by legacy technology.

- 2031: CRISPR/Cas9 products become commonly available. The DNA of certain food products will be modified, as will the DNA of disease-carrying animals such as mosquitoes. In time, human DNA will be modified too, at first to treat disease, later to augment us, even to reverse aging. The resulting ethical implications will be hotly debated.

CRISPR/Cas9, image credit: NIH Image Gallery

- 2032: The fake news battle reaches new heights as augmented reality products whisper into our ear, advising us if information we have just heard or read may in fact be fake or misleading. Although the intention behind this is good, there will be a massive public backlash and claims of state-sponsored indoctrination. Some press outlets, having fallen victim to the fake news apps, will be among the most vociferous critics.

- 2032-2040: Augmented reality begins to transform the way we communicate over distance. Green screens become ubiquitous in restaurants, meeting rooms, offices and even in the bedroom, as we communicate through simulated face-to-face interaction. We can work from home or a local office, even though it will feel as if we are working with others in an office many miles away, maybe even thousands of miles away. We will eventually be able to make love, or an approximation of it, at a distance.

- 2034: The era of quantum supremacy. For certain applications, such as studying molecules or protein folding, quantum computers will be thousands of times more powerful than conventional computers. But they won’t be able to do everything that conventional computers can do. Instead, we will see convergence: quantum computer plus conventional computer, and hopefully plus human, proves to be a formidable combination.

- 2035: Neurocomputing reaches a level such that technology is finally applied to augment our own cognitive abilities. Interfaces between brains and computers will emerge at around this time, and before the end of this decade, it will be possible to control our brain-augmenting devices by thought alone.

- 2035: The threat from climate change reaches such a level that it is realised we face a do-or-die moment: only global cooperation can save us from ourselves, and only by building carbon capture projects on a massive scale can we stop, and possibly reverse, some of the disastrous effects of climate change. Just as technology caused the climate change crisis in the first place, it will be required to come up with a fix. Carbon capture becomes essential.

- 2035: Lab-grown meat (from stem cells) becomes competitive in price with conventional meat.

- 2040: Conventional meat becomes a niche product as lab-grown meat proves superior: better for the environment and cheaper.

- 2044: Quantum computers are between one million and a trillion times more powerful than in 2024.

- 2045: Revolution in the battle against climate change: advances in cultured meat will be such that millions of acres of land currently used for grazing animals will be freed up. Billions of trees will be planted on this land and the option to reverse climate change then becomes viable, but will this breakthrough come in time?

- 2049: Projected date for AI writing a best seller (When Will AI Exceed Human Performance? Evidence from AI Experts).

- 2054: Projected date for robot surgeons (When Will AI Exceed Human Performance? Evidence from AI Experts).
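The 2044 quantum forecast appears to follow from applying Rose’s Law, as stated in the 2024 entry, over twenty years. Below is a minimal back-of-the-envelope sketch of that arithmetic. It assumes that qubit count is the measure of ‘power’ and that the doubling period stays fixed at six or 12 months for the whole period; both are simplifying assumptions, not claims made in the forecast itself. The helper name `growth_factor` is hypothetical.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Multiplicative growth after `years` if a quantity doubles every
    `doubling_period_years` (simple exponential doubling model)."""
    return 2 ** (years / doubling_period_years)


# Assumption: Rose's Law (qubits double every six to 12 months) holds
# unchanged from 2024 to 2044.
years = 2044 - 2024

slow = growth_factor(years, 1.0)  # doubling every 12 months -> 2**20
fast = growth_factor(years, 0.5)  # doubling every 6 months  -> 2**40

# For comparison: Moore's Law, doubling roughly every two years -> 2**10
moore = growth_factor(years, 2.0)

print(f"12-month doubling: {slow:,.0f}x")
print(f"6-month doubling:  {fast:,.0f}x")
print(f"Moore's Law:       {moore:,.0f}x")
```

Under these assumptions the two Rose’s Law rates give 2^20 (about 1.05 million) and 2^40 (about 1.1 trillion), which matches the ‘one million to a trillion’ range, while Moore’s Law over the same twenty years gives only about a thousandfold increase. Qubit count alone is, of course, a crude proxy for what a quantum computer can actually do.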

Living in the Age of the Jerk: Technology innovation is changing everything, but will it create dystopia or utopia? Join the debate. The book will be available in 2020.