The BBC Archive contains material dating back to the 1880s, preceding the formation of the Corporation itself.

Created in recognition of the intellectual and cultural value in BBC public service programming, it preserves the BBC’s content as a cultural record and for the benefit of future generations.

The UK government recently set out a proposal for increased archive access, agreeing with the BBC that the Archive represents a valuable resource for the general public and academia.

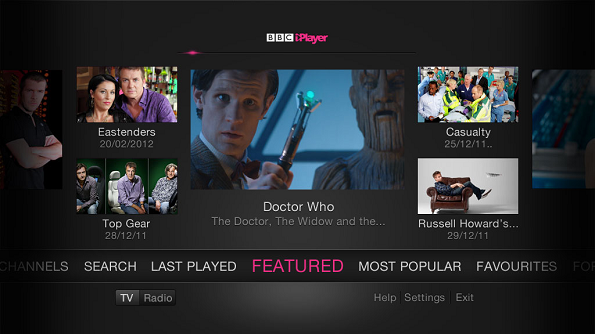

The BBC Rewind project was born of a converged editorial and engineering team originating at BBC Northern Ireland, liberating archived content for public access prototypes and continued use in production. It also focuses on using smart data management technologies to improve the way the Archive can be searched and content discovered.

The primary aim has been to connect the various disparate systems that have been built and acquired over the years, and make archived content searchable from one single place.

The more content we can unlock, the greater the chance of finding relevant material to put online for people to enjoy once more.


When we do use archived content in our storytelling, the response is incredible — not only in numbers of hits but also stickiness. Audiences are more likely to watch entire videos than with other content.

Historically, the Archive was managed manually — firstly by storing physical recordings on reel or tape. Parts of it have been through a series of format transfers as old storage and playback methods become obsolete.

The BBC has invested significantly each time to keep the Archive preserved and viable, and this latest wave of innovation is no exception.

Rewind has been able to build on the perennial efforts of our colleagues in Archives, improving access to assets on the largest scale in our history.

The Archive grows constantly. Our focus has altered to allow large volumes of data to be managed and searched at scale with accuracy and speed.

An open approach

The BBC has always embraced open-source technologies for their rapid innovation and cost-efficiency.

We work with Elasticsearch for its ability to process data at speed and scale. It is flexible enough to wrangle multiple data sources into a single, easily queryable stack while retaining the original data.

We started working with the Elastic Stack a couple of years ago with the initial aim of building a performant prototype for testing out linking different data sources and testing user journeys.

Now we’ve moved into production, our methodology has become more sophisticated as the technology has evolved. Recently, we have diversified the way that we use Elasticsearch to provide a faster, more contextual and relevant search result.

Speech-to-text search, implemented in partnership with our R&D department, was the first key element. It converts the spoken word to text which, whilst not 100% accurate, is a great way to provide temporal metadata: searches take you to the precise point in a programme where a term is spoken.

This speeds up searching dramatically. Results are ranked to increase relevance, complementing existing human-generated metadata and reducing multi-day workflows to a matter of hours.
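To illustrate how temporal metadata lets a search jump to the moment a term is spoken, here is a minimal Python sketch. It assumes the speech-to-text tool emits each word with a start time in seconds; the `TimedWord` type, the sample transcript and the function names are hypothetical and are not the BBC's implementation.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TimedWord:
    """One recognised word and when it starts (hypothetical structure)."""
    text: str
    start_seconds: float


def build_term_index(transcript):
    """Map each lower-cased word to every time offset at which it is spoken."""
    index = defaultdict(list)
    for word in transcript:
        index[word.text.lower()].append(word.start_seconds)
    return index


def find_term(index, term):
    """Return the sorted offsets (in seconds) where the term is spoken."""
    return sorted(index.get(term.lower(), []))


# Made-up transcript fragment, for illustration only.
transcript = [
    TimedWord("The", 12.0),
    TimedWord("polar", 12.4),
    TimedWord("bear", 12.9),
    TimedWord("hunts", 13.5),
    TimedWord("Bear", 95.2),
]
index = build_term_index(transcript)
offsets = find_term(index, "bear")
```

A player could then seek straight to each value in `offsets` rather than asking the user to scrub through the whole programme.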

We’re looking to test other enrichment tools, including visual recognition of people, locations and objects, and are curious how they could benefit production workflows. There’s more work to be done on the audio side too, such as speaker recognition and expansion into non-English languages for international content.

These tools are useful at scale but nothing equals the value of human tagging. We use a three-pronged approach to tackle this. First, thanks to the scalability and performance of Elasticsearch, we can index all metadata about a programme and then give human-generated metadata a greater search ranking score. Certain metadata objects (the title of a programme and its audience-facing description) attract a higher rank, too.
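One way to express this kind of weighting is with per-field boosts in an Elasticsearch `multi_match` query, where `field^N` multiplies that field's contribution to the score. The sketch below only builds the query body as a Python dictionary; the field names and boost values are assumptions made for illustration, not the Rewind team's actual mapping or tuning.

```python
# Hypothetical field names and boost values, chosen only for illustration.
FIELD_BOOSTS = {
    "title": 4.0,           # programme title ranks highest
    "description": 3.0,     # audience-facing description
    "human_tags": 2.0,      # human-generated catalogue metadata
    "speech_to_text": 1.0,  # machine-generated transcript
}


def build_boosted_query(search_text, boosts=FIELD_BOOSTS):
    """Build a multi_match query body that weights fields by their boost."""
    fields = [f"{name}^{boost:g}" for name, boost in boosts.items()]
    return {"query": {"multi_match": {"query": search_text, "fields": fields}}}


query_body = build_boosted_query("polar bear")
```

The resulting `query_body` could be sent to a search endpoint by whichever client is in use; a hit in the title then outweighs the same hit in the machine transcript.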

Second, we use a confidence score to understand how much we should trust each piece of data. We’re planning to use abductive reasoning, cross-referencing data and confidence scores from multiple tools, to try to give a more accurate result. This becomes a multidimensional problem, and the scalability of Elasticsearch is key to solving it.
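As a rough illustration of cross-referencing confidence scores, the sketch below combines scores from several tools with a noisy-OR rule. This is a deliberately simple stand-in that assumes the tools err independently; it is not the abductive reasoning approach described above, and the function name is hypothetical.

```python
import math


def combine_confidences(scores):
    """Noisy-OR: chance that at least one independent tool is correct.

    Each score is a probability in [0, 1] that one tool's claim is right.
    """
    scores = list(scores)
    if not all(0.0 <= s <= 1.0 for s in scores):
        raise ValueError("confidence scores must lie between 0 and 1")
    # Multiply the chances that every tool is wrong, then invert.
    return 1.0 - math.prod(1.0 - s for s in scores)


# Illustrative inputs: speech-to-text is 60% sure, visual recognition 50% sure.
combined = combine_confidences([0.6, 0.5])
```

Two moderately confident, agreeing sources yield a combined score higher than either alone, which is the intuition behind cross-referencing tools.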

Third is the use of context to improve accuracy. If we have data from speech-to-text showing the word ‘bear’ is spoken a number of times, it’s difficult to identify what type of bear we’re referring to. If we then use data from human cataloguing that shows the programme was set in the Arctic Basin, we can deduce, with high probability, that it’s a polar bear.
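The bear example can be sketched as a lookup that refines an ambiguous spoken term using catalogued context. The rule table and function name below are hypothetical and far simpler than a production system would need; they only show the shape of the idea.

```python
# Hypothetical rules: (ambiguous term, catalogued location) -> specific label.
CONTEXT_RULES = {
    ("bear", "arctic basin"): "polar bear",
    ("bear", "yellowstone"): "grizzly bear",
}


def disambiguate(term, catalogued_locations):
    """Return a more specific label if any catalogued location supports one."""
    for location in catalogued_locations:
        label = CONTEXT_RULES.get((term.lower(), location.lower()))
        if label:
            return label
    # No supporting context: keep the term as it was transcribed.
    return term


refined = disambiguate("bear", ["Arctic Basin"])
```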

The data is now multifaceted and multidimensional. We can pull multiple data points — gathered from disparate sources and viewed through different lenses — to achieve a high level of accuracy and relevance.

Users looking for documentaries containing polar bears in Asia can now traverse the data set from three perspectives, dramatically increasing confidence in the quality of the result. No optimisation needed — queries that used to take seconds now take less than a millisecond.

Confidence score

When speech-to-text, visual and location recognition are combined, the results are so relevant that users can be confident of their quality. Searching is quicker and more successful. What’s also interesting is how the platform can turn up more unusual, but still relevant, results.

Historically, databases were optimised to ensure we worked to correct processes and indexes, guaranteeing an accurate result. When loading the same data set into Elasticsearch, we can be less rigid in our approach and do not need everything defined up-front. It also allows us to be very inefficient with initial queries, trying out new ways of cross-referencing data without the need to re-index. We can try out new searches and make connections where, initially, there may be none.


Take the Edinburgh Festival as an example: to mark its 70th anniversary, we had to find content spanning 70 years. The festival’s name has changed several times since 1947, so the challenge was how to connect related and unrelated data to generate a representative result.

We used Elasticsearch to connect words with varying distance between them (for example, Edinburgh and Festival within five or ten words of each other, to account for name changes). We got back results that were ranked in a more useful manner than straight co-occurrence of the two terms. This was on a stack with no custom configuration or optimisation, running on modest hardware.
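A proximity search of this kind can be written in Elasticsearch as a `match_phrase` query with a `slop` value, which tolerates other words between the terms and tends to score closer matches higher. The sketch below builds such a query body and adds a small local helper that approximates the same idea on plain text. The field name `description`, the slop value and both function names are assumptions for illustration; the article does not say which query type the team actually used.

```python
import re


def build_proximity_query(field, phrase, slop):
    """Build a match_phrase query body allowing up to `slop` position moves."""
    return {"query": {"match_phrase": {field: {"query": phrase, "slop": slop}}}}


def words_within(text, first, second, max_gap):
    """Rough local analogue: are the two words at most `max_gap` words apart?"""
    tokens = re.findall(r"\w+", text.lower())
    first_pos = [i for i, t in enumerate(tokens) if t == first.lower()]
    second_pos = [i for i, t in enumerate(tokens) if t == second.lower()]
    # Gap = number of words sitting between the two matched positions.
    return any(abs(i - j) - 1 <= max_gap for i in first_pos for j in second_pos)


# Hypothetical field name and slop value.
query_body = build_proximity_query("description", "Edinburgh Festival", 5)
near = words_within(
    "The Edinburgh International Festival opened today", "Edinburgh", "Festival", 5
)
```

Here `near` is true because only one word separates the two terms, so a renamed festival title would still match while a document mentioning the two words paragraphs apart would not.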

Exposing the Archive in a targeted fashion has prompted 26 million audience engagements in the last nine months alone. As we look to the future, data, and the insight within it, will remain a key part of the BBC’s strategy.

On the editorial side, we’re looking at how the Archive can benefit the BBC for the long term — how production teams can use it in new and creative ways. For users we’re looking forward to liberating even more content for everyone to enjoy.