When a scientist at CERN came up with an idea for a new protocol for supporting communication over a medium called the internet, which at that time was already approaching 30 years of age, he didn’t for one moment consider the possibility of echo chambers.

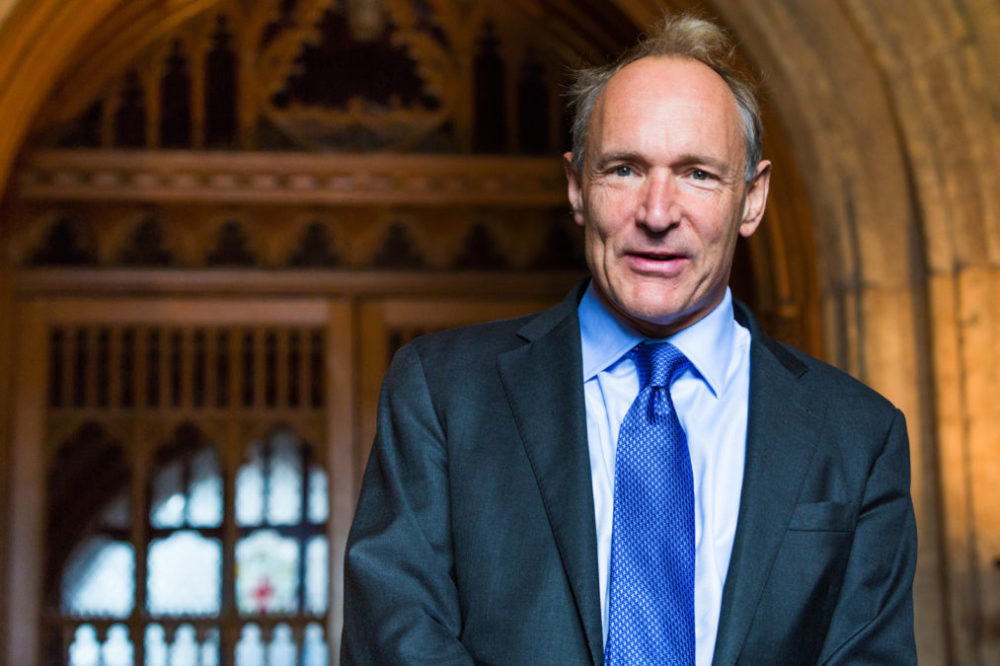

Tim Berners-Lee not only created HTML and thus the World Wide Web, he also played a starring role in the opening ceremony of the London 2012 Olympics. Those were the games when Usain Bolt continued to dazzle us, and the opening ceremony where James Bond and the Queen apparently parachuted their way into the Olympic Stadium. In a way, the World Wide Web, which we are told is now 30 years of age, represents a bigger miracle than one in which the Queen and James Bond parachute for real.

Who could have dreamed, surely not Sir Tim, that one day we would carry around in our pockets or handbags a device that gives access, in just seconds, to the greatest library that has ever existed?

Now we dream of augmented reality devices, maybe glasses, or contact lenses, or even chips in our heads, through which, via a 5G connection to a cloud run by quantum computers, we can access AI via the filter of the internet in a split second.

And yet, in this era where we should all have seer-like understanding, fake news dominates conversation, and populism, often fuelled by what are at best distortions of the truth and at worst blatant lies, threatens to turn government into chaos.

The internet that made the Arab Spring possible is now blamed for spreading hate and terror.

Facebook stands accused of allowing third parties to manipulate data in a way that threatens democracy. US presidential hopeful Elizabeth Warren calls for the breakup of Facebook, Alphabet and Amazon, and within the EU, moves are afoot to slap taxes on tech companies.

In a recent Gartner survey, asking people to imagine the world in 2035, one person predicted the rise of privacy vacations — two weeks away from prying eyes, Big Brother banished for the duration of our holiday, staying in a room which is most certainly not labelled room 101.

Some futurologists envision a future where some people shut themselves off from the rest of the world, tending fields with a plough, their thoughts their own, an analogue existence in a digital world. Others imagine company boards, in fear of cybercriminals, resorting to pen, paper and manual typewriters, as a way to record confidential discussions and ideas.

Wikipedia says that the World Wide Web heralded the Information Age. Whether it was talking about us or something more generic is unclear, but the team at Information Age suspects it was thinking with a generic hat on.

What we can say is that the internet faces challenges. Whether those challenges come from Luddites, latter-day King Canutes, or somewhere or someone else is not certain. But it is surely certain that you can’t turn the clock back. You can’t un-invent the World Wide Web, or make its 30th birthday its last, any more than we can all go back to hunting and gathering for a living. With the world’s population at 7.5 billion and growing, the only way to feed and sustain such numbers is by technological innovation, and no medium in history comes close to the World Wide Web in supporting the exchange of ideas which feeds innovation.

Companies that attempt to practise analogue techniques out of a fear of technology will simply provide opportunities for corporate undertakers to practise their art.

The real problem with the World Wide Web is not the protocol created by Tim Berners-Lee; it is us, a species of cavemen dressed in suits and living in houses with central heating and air conditioning.

According to the anthropologist Robin Dunbar, 150 (or 148 to be precise) is the cognitive limit to how many people we can have meaningful interaction with. How many of us have hundreds of friends on Facebook, connections on LinkedIn, and followers on Twitter? How many people do you meet? How many people, both fictional and real, who you see on TV do you feel you know?

We are designed to live in groups; that is why there is such a thing as groupthink. We are designed to be on our feet most of the time. We are not designed to eat sugar every day, we are not designed to live in an age of plenty, and we are certainly not designed to have a permanent connection to the hive of collective intelligence we call the World Wide Web.

As the likes of Daniel Kahneman have shown, we are not rational. Maybe we are when we are doing what is natural, but since we invented agriculture, let alone the World Wide Web, we have not lived naturally, and our irrationality is pervasive and sometimes poisonous.

The only possible answer lies in education — not learning multiplication or grammar or books by Shakespeare, not that these things are not important — but learning how to embrace technology, using it as an aid, which we control, rather than our master, who we must obey.

The World Wide Web is 30, and it should have made us wiser, more understanding and more tolerant. Instead it feels more like Pandora’s box, which let all the evils into the world. But Pandora’s box also contained hope, and we must all hope, with technologists taking the lead, that we can tame the World Wide Web, as we once tamed dogs and horses, and be its master. By using education to create a kind of digital enlightenment, we can turn that hope into reality.