Enterprise IT operations teams face unprecedented challenges today. They must:

- Be more cost-effective (spend less money).

- Be more agile and responsive to the business.

- Deliver services with high quality and high availability.

- Support “digitisation” initiatives designed to get more business functionality online more quickly and to enhance that functionality more frequently.

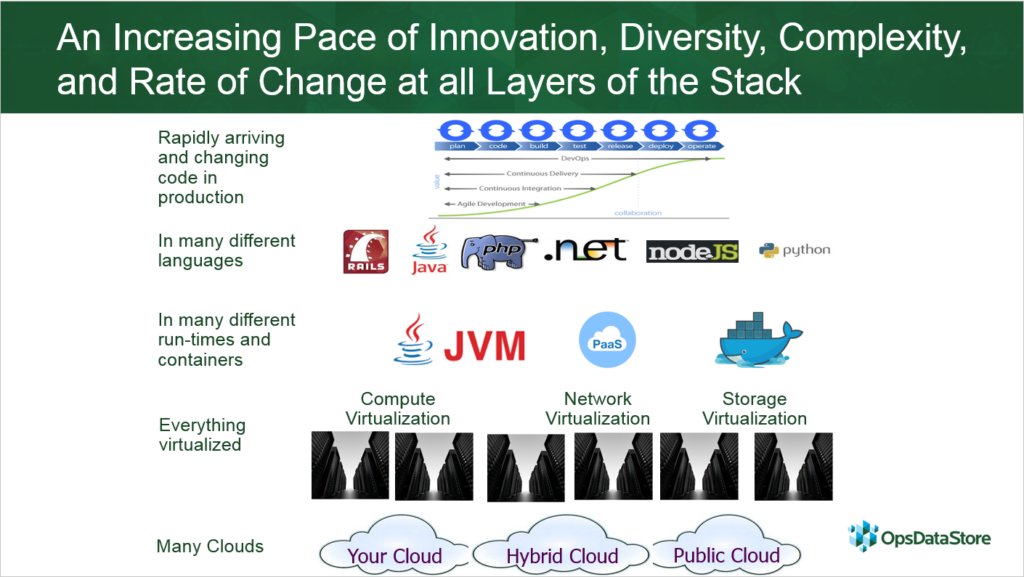

- Cope with diversity in the “IT stack”, which keeps growing as the pace of innovation in IT increases.

- Deal with virtualisation at every layer of the stack including compute virtualisation, network virtualisation, storage virtualisation and the virtualisation of the entire IT environment through various cloud offerings.

Is it any wonder, then, that the common joke is that CIO stands for “career is over”?

The ever increasing pace of innovation, diversity and automation

Twenty years ago, IBM, BMC, HP and CA promised enterprise customers that their suites of software and theirs alone could help those customers manage the reliability, performance, compliance, and service quality of their enterprise applications.

This was called “business service management” and it was a miserable failure. It failed not because IBM, BMC, HP and CA were bad vendors, or because they employed bad people, but because even back in 1997 the pace of innovation was too high for any single vendor to be able to keep up.

Today the pace of innovation, the degree of diversity and the level of automation are all much higher, as depicted by the diagram below.

The replacement for the frameworks

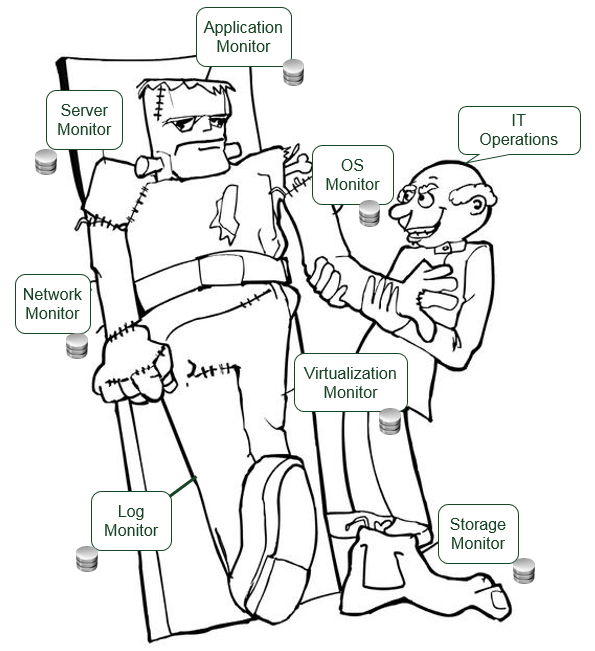

So if single-vendor solutions to the management of enterprise environments have failed, what has replaced them? The answer is a large number of best-of-breed solutions, each of which does a great job of solving its target problem for its target audience, as depicted below.

But best-of-breed monitoring and management tools have created a whole new problem. Each tool comes with its own database, so management data ends up fragmented across N products. That fragmentation leads to the Franken-Monitor shown below.

Data-driven IT operations – the only viable solution

The business has been making data-driven decisions for the last few years. In fact, IT has built most of the enterprise data lakes that have enabled data-driven decisions on the business side.

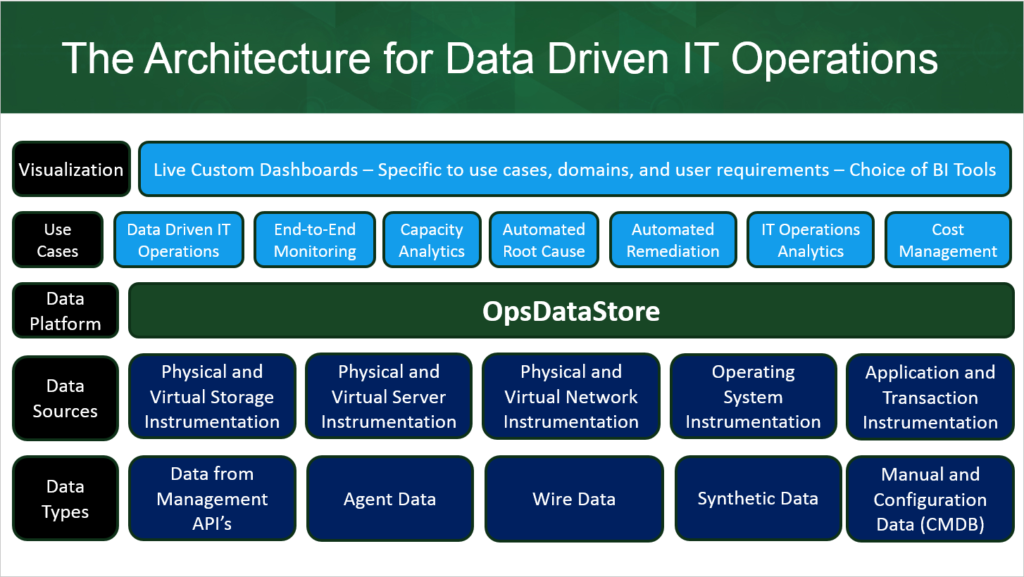

But IT has not taken advantage of big data to run its own operations, for the simple reason that IT runs in real time and not in batch windows. When something goes wrong in IT (a transaction is slow, for example), it needs to be fixed right away, not in 24 or 48 hours. IT operations is therefore a real-time big data problem, and it needs a real-time big data architecture to cope with it.

Below is an example of the approach that can be taken to solve this problem. This approach brings two sorely lacking things to IT operations: an architecture that describes how the various sorts of IT operations data and their sources fit together, and a real-time big data back end that can keep up with these streams of data, make them useful, and make them available in a high-performance, query-optimised data store.

A simple log store is not enough

Many enterprises have made substantial investments in log management solutions that collect logs from various layers of the stack, normalise them around their time stamps and make the resulting log database easy to query.

Applying big data principles to logs is a great first step but it is not enough. The problems with log stores are:

- The only variable upon which logs are organised is their time stamp.

- There is therefore no inherent understanding of the relationships between logs in the log store.

- It is up to the user to query the log store already knowing exactly what to look for.

- Hence the log store is an inadequate solution for IT operations and applications performance management.

Relationships are the key missing piece

Given the above, the key missing piece is an understanding of what relates to what over time. This has been one of the most difficult problems in the industry to resolve over the last 30 years.

Configuration management databases (CMDBs) have failed to keep up with the pace of change. Application discovery and dependency mapping (ADDM) is an after-the-fact process that has likewise been unable to keep up with the changes.

In a world of constant releases of new software into production, and constant changes in the environment due to automation, CMDBs and ADDM are legacy technologies and approaches.

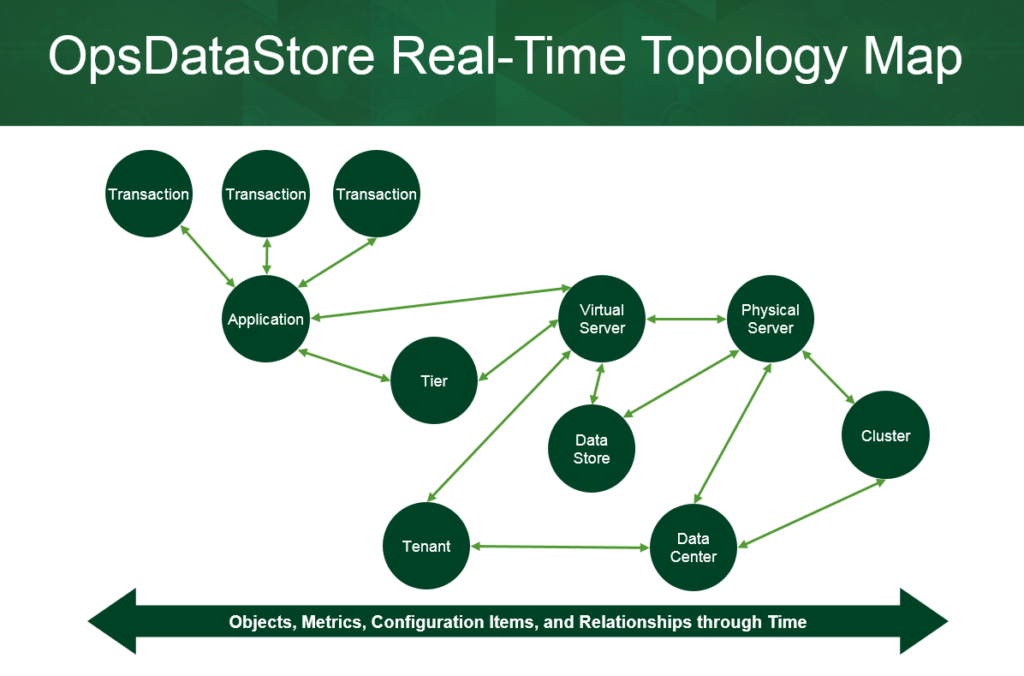

So what can keep up? Only an approach that determines the relationships through the stack in real time, as they are created, as the accompanying diagram shows.
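To make the idea of capturing relationships as they are created more concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of any particular product: the class `RelationshipTimeline`, the event kinds `"created"` and `"removed"`, and the object names are all hypothetical, and events are assumed to arrive in time order.

```python
from collections import defaultdict


class RelationshipTimeline:
    """Hypothetical store of 'runs on' relationships and when each was valid."""

    def __init__(self):
        # (obj, target) -> list of [start, end] spans; end is None while active.
        self._spans = defaultdict(list)

    def observe(self, ts, kind, obj, target):
        """Record a relationship event as it happens (assumed time-ordered)."""
        spans = self._spans[(obj, target)]
        if kind == "created":
            # Open a new span unless one is already active.
            if not spans or spans[-1][1] is not None:
                spans.append([ts, None])
        elif kind == "removed":
            # Close the active span, if there is one.
            if spans and spans[-1][1] is None:
                spans[-1][1] = ts
        else:
            raise ValueError(f"unknown event kind: {kind}")

    def runs_on_at(self, obj, ts):
        """Return what `obj` was running on at time `ts`."""
        return sorted(
            target
            for (source, target), spans in self._spans.items()
            if source == obj
            and any(start <= ts and (end is None or ts < end)
                    for start, end in spans)
        )


# A virtual server moves from one physical host to another at t=200.
timeline = RelationshipTimeline()
timeline.observe(100, "created", "vm-7", "host-3")
timeline.observe(200, "removed", "vm-7", "host-3")
timeline.observe(200, "created", "vm-7", "host-5")

print(timeline.runs_on_at("vm-7", 150))
print(timeline.runs_on_at("vm-7", 250))
```

Because every relationship carries the interval in which it held, the store can answer "what related to what" for any past moment, which a periodically refreshed inventory cannot do once the environment has changed.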

Contrast a query into a log store with a query into a relationship store. Suppose you are trying to find the chain of resources that supports a transaction. With a log store, the person issuing the query has to know, and include in the query, the identifier of every object involved (application, tier, virtual server, physical server and datastore).

In other words, the user of the log store has to know everything about where that transaction is running in order to get the results.

Contrast this with a user of an intelligent store of related metrics. In this case all the user has to do is write the ID of the transaction into the query along with the time range.

What is returned is the entire topology for the objects that support that transaction, as well as all of the metrics associated with the behaviour of those objects including the response times of the transactions and the resource utilisation of the infrastructure.
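The contrast between the two kinds of query can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the classes `LogStore` and `RelationshipStore`, the tuple layouts, and the object identifiers are hypothetical and do not represent any vendor's API.

```python
class LogStore:
    """Records ordered by time stamp only; relationships are unknown."""

    def __init__(self, records):
        self.records = records  # (timestamp, object_id, message)

    def query(self, object_ids, start, end):
        # The caller must already know every object behind the transaction.
        return [r for r in self.records
                if r[1] in object_ids and start <= r[0] <= end]


class RelationshipStore:
    """Metrics plus time-stamped edges linking each object to what supports it."""

    def __init__(self, edges, metrics):
        self.edges = edges      # (timestamp, object_id, supporting_object_id)
        self.metrics = metrics  # (timestamp, object_id, metric_name, value)

    def query(self, transaction_id, start, end):
        # Walk the edges seen in the time range, starting at the transaction.
        topology, frontier = [transaction_id], [transaction_id]
        while frontier:
            current = frontier.pop()
            for ts, obj, supporter in self.edges:
                if obj == current and start <= ts <= end \
                        and supporter not in topology:
                    topology.append(supporter)
                    frontier.append(supporter)
        rows = [m for m in self.metrics
                if m[1] in topology and start <= m[0] <= end]
        return topology, rows


edges = [
    (10, "txn-42", "app-checkout"),
    (10, "app-checkout", "vm-7"),
    (10, "vm-7", "host-3"),
    (10, "vm-7", "datastore-1"),
]
metrics = [
    (12, "txn-42", "response_ms", 840),
    (12, "host-3", "cpu_pct", 97),
    (12, "host-9", "cpu_pct", 20),  # unrelated host, should be excluded
]

store = RelationshipStore(edges, metrics)
topology, rows = store.query("txn-42", start=0, end=60)
print(topology)
print(rows)
```

With `LogStore.query`, the caller would have to pass all five identifiers by hand. With `RelationshipStore.query`, the transaction ID and a time range are enough, and the store works out the rest from the relationships it has recorded.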

Summary

Businesses are reaping benefits from big data and data-driven decisions, which are driving improvements in revenues, costs, profits, growth and market share.

Ironically, IT built the big data systems that the business relies upon for these benefits, but has failed to capitalise upon big data to improve its own operations.

IT operations needs to embrace data-driven decisions enabled by the right architecture and sources of metrics in order to deliver the agility, service quality, and cost profile required by the business.

Sourced by Bernd Harzog, CEO of OpsDataStore