Guido Meardi, CEO and co-founder of V-Nova, discusses how metaverse stakeholders can accommodate the pending influx of data to drive value

Microsoft’s recent acquisition of online gaming giant Activision Blizzard, viewed by analysts as a $75 billion land grab deal to bring existing virtual worlds even deeper into 3D digital environments, has yet again brought the metaverse into sharp focus. And with the United Arab Emirates launching the world’s first metaverse customer service centre to offer healthcare services virtually, the buzz around how this new virtual space will impact every aspect of our lives is at an all-time high.

But as the ideas for virtual reality (VR) and the metaverse become bigger and brighter, so do the requirements for making them a reality. The volumes of data needed to deliver an immersive metaverse are enormous, and only set to grow as devices continue to be developed to satisfy appetites for ever more photorealistic and interactive experiences. Businesses wishing to take advantage of the opportunities in the metaverse must therefore consider how to overcome the challenges that arise with increased data streaming.

The challenges to make the metaverse feasible at scale

For the metaverse to work, the following challenges must be addressed:

- Volumetric objects must be suitably compressed and streamed in real time to the rendering device — This requires suitable lossy coding technologies to efficiently compress, decompress and stream data types such as meshes, textures and point clouds, which so far have only been losslessly “zipped” for monolithic, once-in-a-while downloads.

- The network backbone and CDNs must be able to reliably stream volumetric worlds — Although some simple experiences will certainly require less, it is unlikely that truly photorealistic 6DoF volumetric experiences will be feasible with less than 100 Mbps of data transfer.

- Volumetric decoding and low-latency video encoding must be fast enough — The rendering device has to decode the received volumetric data in real time, process tracking data from the XR user device, render the scene at sufficient resolution, and encode an ultra-low-latency video feed at that resolution and frame rate.

- The network connection between rendering device and XR display device must have sufficient bandwidth — This is to reliably relay the high-resolution video feed. Most wireless connections start having reliability issues beyond 30-50 Mbps.

- The XR display device must encode sensor data of what it sees, plus decode the high-resolution video feed, all at very low power — This needs to be low enough for the silicon chip and its associated solid-state battery to be extremely small.
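To give a sense of scale for the video-casting challenges listed above, the back-of-the-envelope sketch below computes the raw bitrate of an uncompressed stereoscopic feed and the compression ratio needed to fit it into a wireless link. The resolution, frame rate and bit depth are illustrative assumptions rather than figures from this article; only the 30-50 Mbps link range is taken from the text, and the function names are hypothetical.

```python
def raw_bitrate_mbps(width, height, fps, bits_per_pixel, views=1):
    """Uncompressed video bitrate in megabits per second."""
    return width * height * fps * bits_per_pixel * views / 1_000_000


def required_compression_ratio(raw_mbps, link_mbps):
    """How many times smaller the stream must become to fit the link."""
    return raw_mbps / link_mbps


# Assumed feed (not from the article): 3840x2160 per eye, two eyes,
# 90 frames per second, 12 bits per pixel (8-bit 4:2:0 sampling).
raw = raw_bitrate_mbps(3840, 2160, fps=90, bits_per_pixel=12, views=2)

# Link budgets taken from the 30-50 Mbps reliability range quoted above.
for link in (30, 50):
    ratio = required_compression_ratio(raw, link)
    print(f"{link} Mbps link: raw {raw:,.0f} Mbps needs about {ratio:,.0f}:1 compression")
```

Under these assumptions the raw feed is close to 18 Gbps, so the video has to shrink by a factor of several hundred before it fits a typical wireless connection, which is why the encoder on the rendering device and the decoder on the headset matter as much as the network itself.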

Better experiences, more data

For the metaverse to succeed and provide seamless experiences, it will need technology to make those enormous volumes of data (both volumetric streaming and ultra-low-latency video casting) manageable in real-time with reasonable devices. Putting down more fibre cables and building new data centres will not magically solve the problem.

Additionally, the International Energy Agency found that data centres alone consume around 200 terawatt-hours (TWh) of electricity a year, and are responsible for approximately 2% of greenhouse gas emissions. Low-processing-complexity approaches are therefore needed to fulfil the metaverse vision without either breaking the bank or endangering our planet further.

High quality VR offering with data compression

One of the foundational pillars to enable the metaverse is more efficient and less energy-hungry data compression. As XR technologies advance and become more mainstream, the metaverse needs to accommodate higher resolution displays and higher streaming quality, for both video feeds and volumetric objects, to allow its users to completely immerse themselves.

By reducing the mammoth file sizes needed, businesses can conserve storage capacity and power, and minimise the need to expand their infrastructure to cope. They can also effectively manage the growing volumes of data from XR devices without compromising on viewing quality.

The Low Complexity Enhancement Video Coding standard, MPEG-5 LCEVC (LCEVC), is an example of technology well suited to metaverse applications. It allows highly efficient compression of low-latency video feeds, making higher quality streaming in the new XR reality possible and mass adoption more feasible. LCEVC also offers various multi-layering features that are well suited to video streaming and rendering within a complex 3D space, swiftly displaying and updating the image pixels without any apparent lag for the user.

With reduced file sizes and efficient real-time data decompression (including 8K stereoscopic high-frame-rate decompression on low-power ASICs suitable for devices such as XR eyeglasses), the original rendered quality can remain intact, immersing the user in captivating and realistic digital experiences.

LCEVC also demands less bandwidth than non-enhanced video coding, which is crucial for meeting key bandwidth thresholds. Its low complexity means that LCEVC-powered video can be encoded and decoded in real time at high enough quality and low enough power.

Another multi-layer coding standard, SMPTE ST 2117 VC-6 (VC-6), shows promise for the metaverse, especially in relation to lossy and lossless coding of non-video data such as point clouds, meshes, depth maps, textures and atlases. An evolution of VC-6 adds point-cloud compression, enabling significant amounts of data to be compressed into a manageable asset.

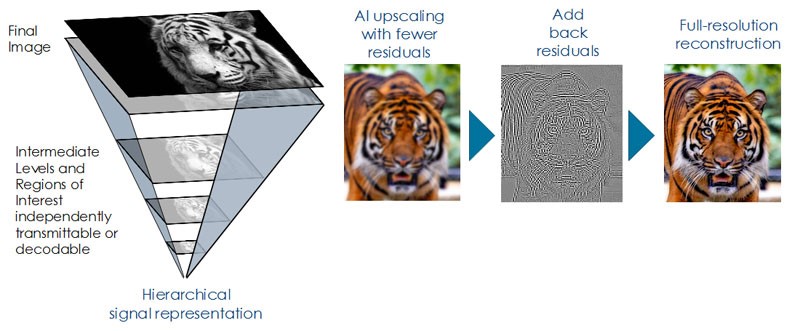

The multi-layer coding approach of VC-6 allows a hierarchical signal representation of S-trees with several layers of so-called “residuals”, which can be efficiently coded in parallel via directional transforms, also allowing for progressive and/or Region-of-Interest transmission and decoding.
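The idea of a hierarchy of resolutions linked by layers of residuals can be illustrated with a minimal sketch. This is a generic pyramid on a one-dimensional integer signal, assuming simple pair averaging and sample repetition; it does not implement VC-6's S-trees or directional transforms, and all function names are hypothetical.

```python
def downsample(signal):
    """Halve the resolution by averaging neighbouring pairs (integer floor)."""
    return [(signal[i] + signal[i + 1]) // 2 for i in range(0, len(signal), 2)]


def upsample(signal):
    """Double the resolution by repeating each sample."""
    return [s for s in signal for _ in range(2)]


def encode(signal, levels):
    """Return (lowest-resolution base, residual layers ordered coarse to fine).

    The signal length is assumed to be divisible by 2 ** levels.
    """
    residuals = []
    current = signal
    for _ in range(levels):
        lower = downsample(current)
        prediction = upsample(lower)
        # The residual is whatever the upsampled prediction gets wrong.
        residuals.append([c - p for c, p in zip(current, prediction)])
        current = lower
    return current, residuals[::-1]


def decode(base, residuals, layers=None):
    """Rebuild the signal, optionally stopping after `layers` residual layers."""
    current = base
    for residual in residuals[:layers]:
        current = [p + r for p, r in zip(upsample(current), residual)]
    return current


signal = [10, 12, 13, 13, 40, 42, 41, 39]
base, residuals = encode(signal, levels=2)
preview = decode(base, residuals, layers=1)  # progressive: half resolution
full = decode(base, residuals)               # all layers: exact reconstruction
```

Each residual layer depends only on the layer below it, so a decoder can stop early for a lower-quality preview, and the samples within a layer can be processed independently of one another, which is the property that makes parallel and progressive decoding possible.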

Importantly for metaverse bots, hierarchical multi-layer signal formats also make AI technologies faster and more accurate at classification tasks, since they are consistent with the natively hierarchical structure of information. In practice, detection can be performed on a lower-quality rendition of the signal, with high-resolution region-of-interest decoding then applied only to the portions that the detection step flags as being of interest.
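The detect-then-refine workflow described above can be sketched as follows. This is a toy illustration on a one-dimensional signal: the block size, the threshold detector and every function name are assumptions made for the example, standing in for a real classifier and a real region-of-interest decoder.

```python
BLOCK = 4  # assumed number of full-resolution samples per low-resolution value


def low_res_rendition(signal):
    """Stand-in for decoding only the lowest layer: one average per block."""
    return [sum(signal[i:i + BLOCK]) // BLOCK for i in range(0, len(signal), BLOCK)]


def detect(low_res, threshold):
    """Toy detector: flag blocks whose coarse value exceeds a threshold."""
    return [i for i, value in enumerate(low_res) if value > threshold]


def decode_region(signal, block_index):
    """Stand-in for region-of-interest decoding of one block at full resolution."""
    start = block_index * BLOCK
    return signal[start:start + BLOCK]


full_res = [3, 2, 4, 3, 5, 4, 3, 4, 90, 95, 97, 92, 4, 3, 2, 3]

coarse = low_res_rendition(full_res)         # cheap pass over the whole signal
hits = detect(coarse, threshold=50)          # blocks worth a closer look
regions = {i: decode_region(full_res, i) for i in hits}

# Share of the signal that had to be decoded at full resolution.
decoded_fraction = sum(len(r) for r in regions.values()) / len(full_res)
```

In this example only one of the four blocks is flagged, so just a quarter of the signal is decoded at full resolution; the saving in a real system would depend on how much of the scene the detector marks as interesting.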

Delivering the future

New XR milestones are being announced every month, such as the first metaverse wedding in India and the first lecture in the metaverse at Queen Mary University of London. Many organisations are accordingly embracing the challenge of delivering this next-gen user interface, targeting tangible benefits for their bottom lines and customer relationships.

However, the ever-growing volume of data that is required to power the XR meta-universe is a challenge that needs to be addressed first, requiring a solution that doesn’t put a strain on the environment or compromise on visual quality. Whilst there won’t be one answer to this complex issue, novel data compression technologies and standards such as MPEG-5 LCEVC will be pivotal in enabling the metaverse to reach its full potential.
