The history of AI

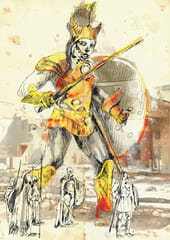

BC: Talos

The history of AI begins with a myth and, just as with many modern AI systems, it concerned defence. According to Greek mythology, Talos was a giant bronze automaton created by the god Hephaestus to guard the island of Crete by throwing stones at passing ships.

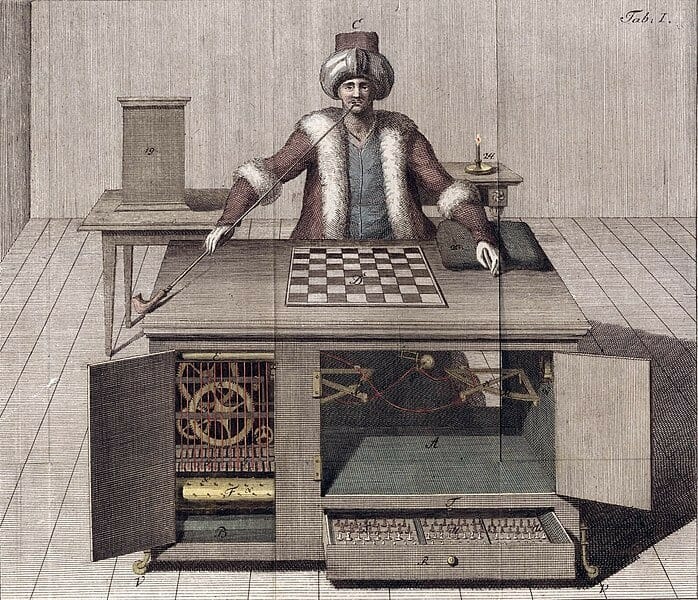

1769: The Turk

Just as many AI experts today accuse companies of claiming to use AI when in fact they don’t, the second well-known example of AI was a lie. The Turk was presented as a mechanical chess-playing machine, built by Wolfgang von Kempelen in 1769 to impress Empress Maria Theresa of Austria. The Turk even played against Napoleon Bonaparte and won. But it was a hoax: a human chess master sat hidden inside the machine, controlling it.

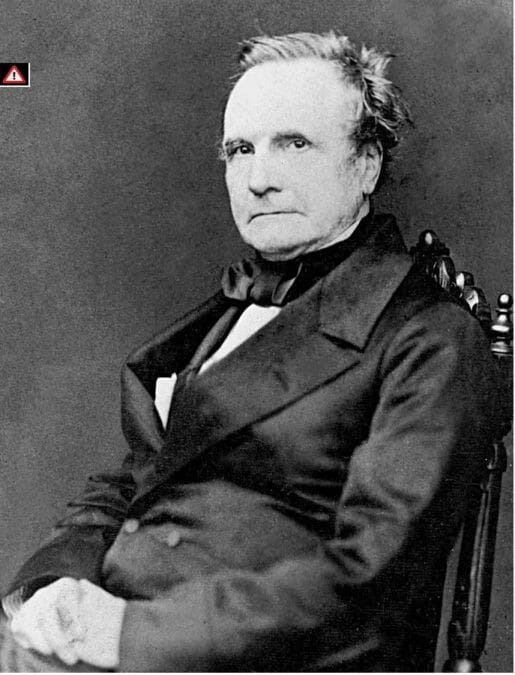

1830s: Babbage’s Analytical Engine

In the 1830s, Charles Babbage designed the Analytical Engine. It was never completed, although in 1991 London’s Science Museum built a working version of his related Difference Engine No. 2 from his drawings. The Analytical Engine wasn’t a design for an AI machine, of course, but it is now considered a forerunner of the digital computer.

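1943: The artificial neuron

Warren McCulloch and Walter Pitts publish ‘A Logical Calculus of the Ideas Immanent in Nervous Activity’, a mathematical model describing the neuron.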

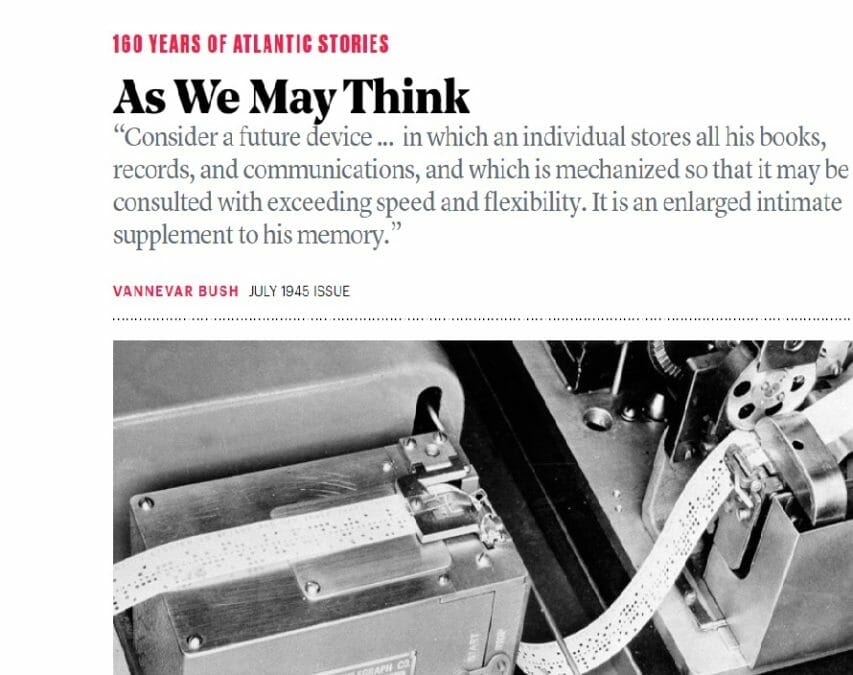

1945: The history of AI begins with an essay

In this year, Vannevar Bush wrote an essay for The Atlantic entitled ‘As We May Think’. It proposed a machine, which he called the memex, that would hold a kind of collective memory and provide knowledge. Bush believed that the big hope for humanity lay in supporting greater knowledge, rather than information.

1947: The transistor

John Bardeen and Walter Brattain, with support from colleague William Shockley, demonstrate the transistor at Bell Laboratories in Murray Hill, New Jersey.

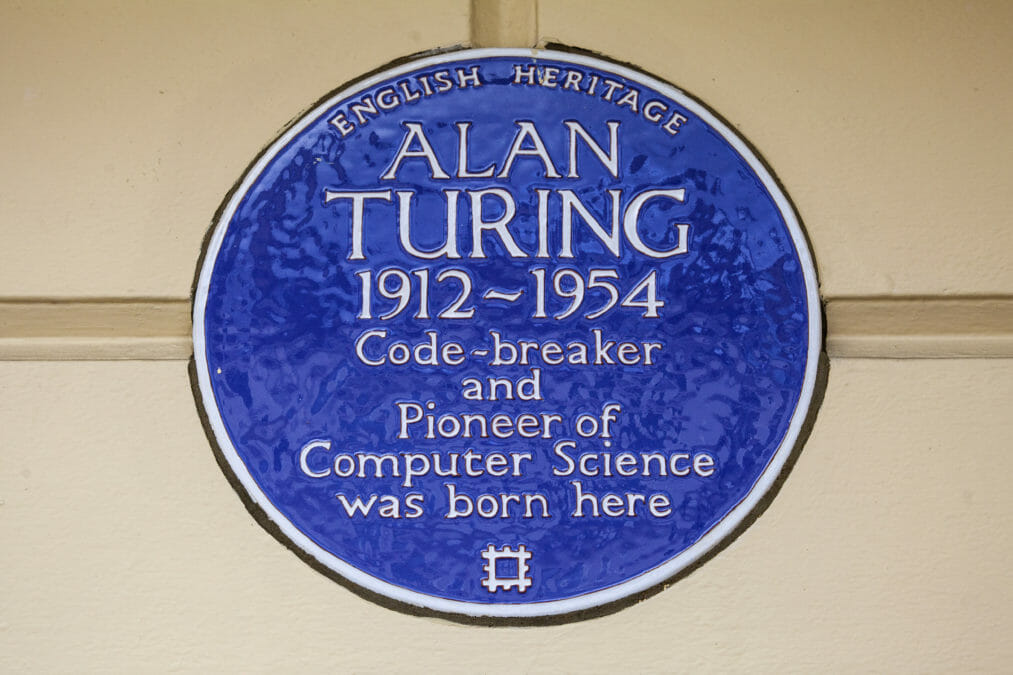

1950: The Turing Test

Alan Turing proposes a test for ascertaining whether a computer can think like a human, in his paper ‘Computing Machinery and Intelligence’. The test is based on what Turing called the imitation game, in which a judge questions a human and a computer in separate rooms, communicating only by keyboard and monitor. The judge has to identify which of the two is human. This has become known as ‘the Turing test’.

1951: Marvin Minsky makes his first mark of many

Marvin Minsky builds SNARC, the first randomly wired neural network learning machine. Minsky, whose contributions to AI are legion, later said: “No computer has ever been designed that is ever aware of what it’s doing; but most of the time, we aren’t either.”

1956: The term ‘artificial intelligence’ enters use

John McCarthy organises a conference at Dartmouth College, having coined the phrase ‘artificial intelligence’ in his proposal for it. Also at the conference were Marvin Minsky, Nathaniel Rochester and Claude Shannon.

1958: The Computer and the Brain

John von Neumann’s book The Computer and the Brain is published, a year after his death.

1959: Machine Learning

Arthur Samuel coins the phrase ‘machine learning’ in a paper on teaching a computer to play checkers.

1965: Moore’s Law

Gordon Moore, who went on to co-found Intel, observes that the number of transistors on an integrated circuit is doubling every year, a rate he revised in 1975 to every two years. Moore’s Law evolved out of this observation, and a popular variant holds that computers double in speed every 18 months.

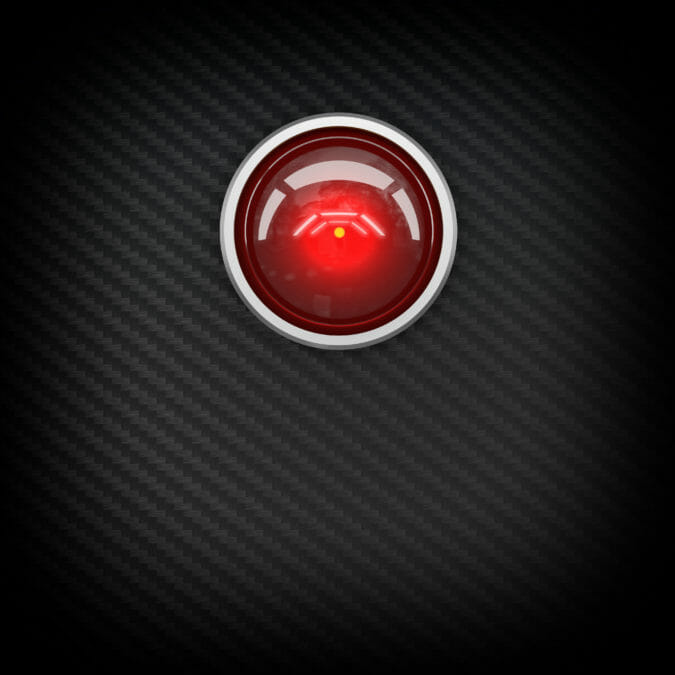

1968: HAL

Stanley Kubrick releases 2001: A Space Odyssey, written with Arthur C. Clarke, who developed the novel of the same name alongside the film. The movie is perhaps most famous for the scenes involving HAL, an apparently self-aware computer.

1975: The history of AI takes a turn to neural networks

Kunihiko Fukushima develops the Cognitron, the first multilayered unsupervised neural network and an early step towards today’s deep neural networks.

1982: Fifth Generation neural networks concept

At a US-Japan conference on Neural Networks, Japan announced the Fifth Generation neural networks concept.

1984: “Winter is coming” for AI, or so it was warned

At the annual meeting of the American Association for Artificial Intelligence, Roger Schank and Marvin Minsky warned that AI had become over-hyped and that this would lead to disappointment and an ‘AI winter’.

1984: The Terminator

James Cameron’s movie The Terminator is released. It arguably did for the public perception of AI what Jaws did for sharks.

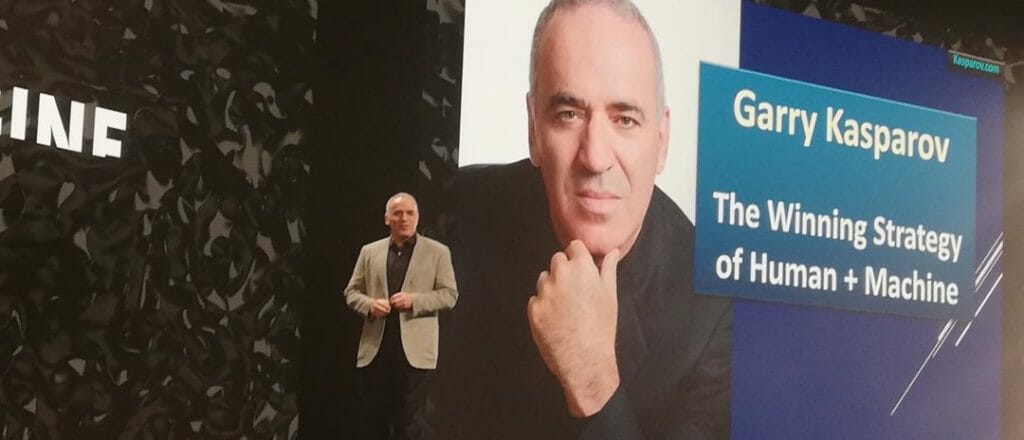

1997: Chess victory to the computer

IBM’s Deep Blue defeated Garry Kasparov at chess. Today, Kasparov says the “computer that defeated me at chess is no more intelligent than an alarm clock.” The ability to defeat a human ceased to be a criterion for defining AI.

2009: The big bang

The big bang of neural networks: Nvidia, a hardware company that originally specialised in graphics technology for video games, saw its GPUs put to work training neural networks. At around that time, Andrew Ng worked out that running neural networks on GPUs could increase the speed of deep learning algorithms 1,000-fold.

2010: DeepMind begins

DeepMind is founded by Demis Hassabis, Shane Legg and Mustafa Suleyman.

2011: IBM strikes back

IBM’s Watson wins the quiz show Jeopardy!, defeating legendary champions Brad Rutter and Ken Jennings.

2013: Natural Language Processing

Neural networks are adapted for natural language processing.

2015 to 2017: The rise of AlphaGo

In 2015, DeepMind’s AlphaGo defeated the European champion of the Chinese game of Go. In 2017, AlphaGo Zero, given only the rules of the game as a guide, could defeat the previous version of AlphaGo within three days of being switched on; within 40 days it had arguably become the greatest Go player ever, rediscovering lost strategies.

Some predictions, courtesy of ‘When Will AI Exceed Human Performance? Evidence from AI Experts’:

2027: Projected date for automated truck drivers.

2049: Projected date for AI writing a bestseller.

2054: Projected date for robot surgeons.

2060: Average projected date for when AI can do everything that humans can do.
