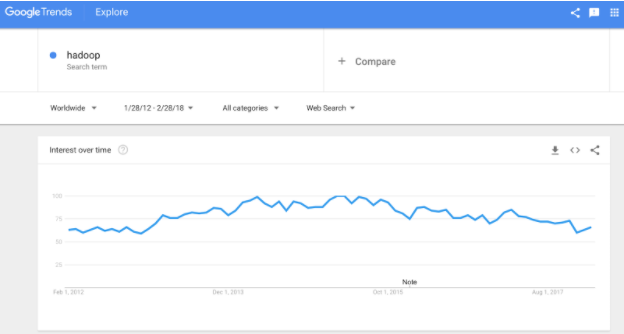

The past 15 years have seen tremendous developments and enterprise adoption in the world of big data and analytics. Hadoop, first deployed at early adopters like Yahoo! and Facebook, provided a robust and scalable starting point for these modern big data initiatives. Although the term didn’t exist at the time, these companies were among the first to build and manage Hadoop-based data lakes.

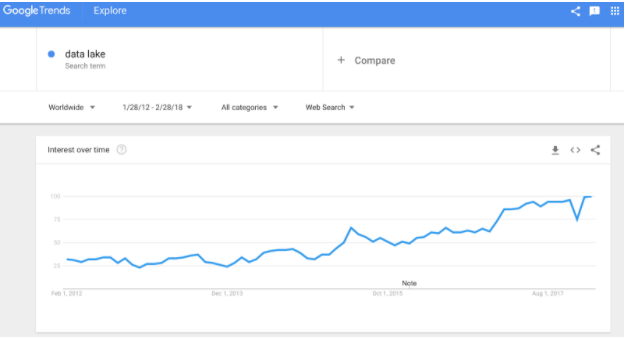

Fast forward to 2018 and the data lake has become a pervasive component of virtually every enterprise data architecture. However, the nature of the data lake has changed significantly over the past few years, and the emergence of cloud has changed the way enterprises look at data lakes.

Hadoop created data lake 1.0

The emergence of Hadoop and its ecosystem components over the past 10 years has paved the way for what we have come to know as the modern data lake. The adoption of Hadoop as the platform for this data lake has been driven in large part by several key characteristics:

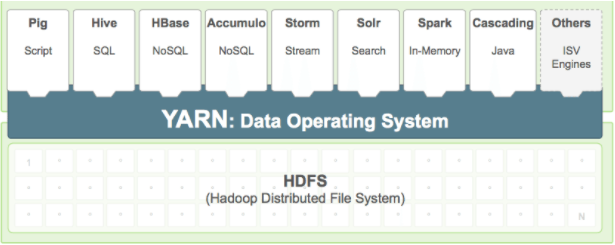

• Distributed robust scale-out storage: At the core of Hadoop is the Hadoop Distributed File System, or HDFS. HDFS fundamentally changed the world of storage by enabling relatively low-cost, scale-out storage in the enterprise. With HDFS, enterprises were now able to pursue a “store now, derive value later” mindset, whereas previously the high cost and limited scale-out of traditional storage systems constrained this kind of thinking.

• Multiple processing engines: The other essential component of the original Hadoop project was a distributed data processing paradigm called MapReduce. Combined with HDFS, MapReduce provided a scalable approach to processing, analysing, and aggregating the data stored in HDFS. Over time, a set of additional purpose-built distributed processing engines emerged as part of the Hadoop ecosystem, including Hive, Impala, and Spark. Hadoop became a place where data could be stored once (in HDFS) and then accessed and queried by multiple processing engines, each chosen as the right tool for the job.

• Shared metadata catalog: The final piece of the Hadoop data lake puzzle was the emergence of the Hive metastore as a shared data catalog. With this shared data catalog in place, objects could be seamlessly shared across these multiple processing engines. For example, a batch MapReduce job could write directly to a table that could be immediately queried by Impala without needing to move, re-catalog, or reformat the data.
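
The "store once, query with many engines" idea in the three points above can be illustrated with a small toy model. This is only a sketch in plain Python, not the Hive metastore API: the `Catalog` class, the `storage` dictionary, and the `batch_write` and `interactive_query` functions are hypothetical names invented for illustration. The point it shows is that the second "engine" finds and reads the same files through the catalog, with no copy or reformatting step.

```python
from dataclasses import dataclass, field


@dataclass
class TableEntry:
    """Catalog record: where a table's files live and how to read them."""
    location: str              # path in the shared storage layer
    columns: tuple             # column names, in file order
    file_format: str = "csv"


@dataclass
class Catalog:
    """Toy stand-in for a shared metastore (hypothetical, not a real API)."""
    tables: dict = field(default_factory=dict)

    def register(self, name, entry):
        self.tables[name] = entry

    def lookup(self, name):
        return self.tables[name]


# Toy stand-in for the shared storage layer: path -> list of raw lines.
storage = {}
catalog = Catalog()


def batch_write(name, columns, rows):
    """'Batch engine': writes the data once and registers the table."""
    path = f"/warehouse/{name}"
    storage[path] = [",".join(str(v) for v in row) for row in rows]
    catalog.register(name, TableEntry(location=path, columns=tuple(columns)))


def interactive_query(name, column):
    """'Interactive engine': reads the same files via the catalog."""
    entry = catalog.lookup(name)
    idx = entry.columns.index(column)
    return [line.split(",")[idx] for line in storage[entry.location]]


batch_write("clicks", ["user", "page"], [("ann", "/home"), ("bob", "/cart")])
print(interactive_query("clicks", "page"))  # ['/home', '/cart']
```

Neither function knows about the other; they only agree on the catalog entry, which is the role the shared metastore plays between real engines.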

The three architectural characteristics above have been instrumental in driving the rapid adoption of Hadoop as a data lake platform. However, this adoption has not been without its challenges. Enterprise data architects, big data specialists and data infrastructure teams often voice the following concerns about Hadoop-based data lakes:

• Inability to separate compute from storage: When Hadoop was first created, it was critical to have compute and storage colocated on the same machine, as the cost of moving data across the network for processing would have severely hampered performance. As a result, Hadoop clusters needed to be sized to support the maximum required storage or processing demands, whichever was greater. In most cases this constraint leads to clusters that have either significant amounts of excess storage or significantly underutilised compute.

• Difficulty achieving both scale and efficiency: Because the initial set of Hadoop deployments were primarily on-premises, the ability to scale resources up and down based on consumption (as one might with a cloud-native service) was inherently limited. As such, today’s on-premises Hadoop data lakes tend to be sized to support the peak anticipated workload (for example, a monthly roll-up process), an expensive proposition in many cases.

• Service management and coordination challenges: Although one of the attractive elements of the Hadoop ecosystem is that it consists of a set of loosely coupled services, the reality is that the installation, configuration, management, and coordination of these services are cumbersome tasks. As a result, a few large single-vendor, monolithic platforms have evolved (namely Cloudera and Hortonworks), and the promise of the loosely coupled approach has been diminished by the need for a sophisticated set of management tooling.
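
The sizing constraint in the first concern above is easy to see with a little arithmetic. The sketch below assumes a hypothetical workload and node shape (all four numbers are made up for illustration) and computes how many nodes a cluster needs when every node bundles a fixed amount of storage with a fixed amount of compute.

```python
import math


def coupled_nodes(storage_tb, cores, tb_per_node, cores_per_node):
    """Nodes needed when each node bundles fixed storage and compute.

    The cluster must satisfy whichever requirement is larger.
    """
    by_storage = math.ceil(storage_tb / tb_per_node)
    by_compute = math.ceil(cores / cores_per_node)
    return max(by_storage, by_compute)


# Hypothetical workload and node shape, chosen only for illustration.
STORAGE_TB = 2000       # data to be stored
CORES = 400             # cores needed for processing
TB_PER_NODE = 40        # storage that comes with each node
CORES_PER_NODE = 32     # compute that comes with each node

nodes = coupled_nodes(STORAGE_TB, CORES, TB_PER_NODE, CORES_PER_NODE)
provisioned_cores = nodes * CORES_PER_NODE
idle_core_share = 1 - CORES / provisioned_cores

print(nodes)                      # 50 (driven by storage; compute alone needs 13)
print(provisioned_cores)          # 1600
print(f"{idle_core_share:.0%}")   # 75%
```

Under these assumed numbers, storage dictates a 50-node cluster even though compute alone would need only 13 nodes, so three quarters of the provisioned cores exceed what the workload requires.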

The cloud is creating data lake 2.0

As the adoption of cloud-based data services continues to rapidly increase, there has been a subtle shift in the characteristics of the data lake. In many ways, cloud-based data lakes look very similar to their on-premises counterparts:

• Distributed robust scale-out storage: Amazon S3, Microsoft Azure Data Lake Store (ADLS), and Google Cloud Storage provide a scale-out, low-cost and robust storage option for both structured and unstructured data, and expose a number of interfaces (including HDFS-compatible ones) to complementary services.

• Multiple processing engines: The big three cloud vendors make it easy to bring a number of data processing services to the data that is landed on these cloud data lakes. Amazon’s Elastic MapReduce (EMR) is among the most popular cloud-based Hadoop offerings; on the traditional database side, Amazon Redshift can easily access and query this same data in S3. Similarly, Microsoft’s HDInsight service makes it simple to consume and process data from the Azure Data Lake Store.

• Shared metadata catalog: A significant development in the cloud data space has been the adoption of the Hive metastore or Hive-compatible catalog services, such as Amazon’s Glue catalog. Microsoft now supports connecting multiple HDInsight or Spark clusters to a single metastore on top of shared data in ADLS. Cloudera’s Altus architecture makes it easier than ever to deploy and manage cloud data services on shared S3 storage.

The cloud data services described above clearly have the characteristics required for a modern data lake, but they avoid some of the pitfalls associated with traditional on-premises data lakes. Specifically:

• Storage and compute services in the cloud can be scaled up and down independently of each other, helped in large part by recent improvements and optimisations in networking. As a result, cloud data lake customers can scale storage without needing to add compute (and vice versa).

• All of the cloud vendors make it very easy to scale resources up and down, and for these resources to be consumed in a pay-per-use model. This means that resources can easily be added to handle peak loads, and then be scaled back to meet steady-state demands.

• The infrastructure management and service coordination challenges inherent in monolithic on-premises solutions are reduced with cloud-based offerings, making it easy to link together various services that leverage a shared data catalog and shared data storage substrate.
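
The difference between sizing for the peak and paying per use can be made concrete with a rough calculation. The sketch below uses hypothetical figures throughout: a steady-state need of 10 nodes, a one-day monthly peak of 50 nodes, and an arbitrary cost of one unit per node-hour. It also assumes, unrealistically, that a node-hour costs the same in both models and ignores storage and data transfer charges.

```python
HOURS_PER_MONTH = 720        # 30 days * 24 hours
PEAK_HOURS = 24              # e.g. a monthly roll-up that runs for one day
STEADY_NODES = 10            # nodes needed outside the peak (assumed)
PEAK_NODES = 50              # nodes needed during the peak (assumed)
COST_PER_NODE_HOUR = 1.0     # arbitrary unit, same in both models (assumed)


def fixed_cost(peak_nodes, hours, rate):
    """Cluster sized for the peak and kept running all month."""
    return peak_nodes * hours * rate


def elastic_cost(steady_nodes, peak_nodes, hours, peak_hours, rate):
    """Pay per use: steady-state size most of the month, peak size briefly."""
    steady_hours = hours - peak_hours
    return (steady_nodes * steady_hours + peak_nodes * peak_hours) * rate


fixed = fixed_cost(PEAK_NODES, HOURS_PER_MONTH, COST_PER_NODE_HOUR)
elastic = elastic_cost(STEADY_NODES, PEAK_NODES, HOURS_PER_MONTH,
                       PEAK_HOURS, COST_PER_NODE_HOUR)

print(fixed)                          # 36000.0
print(elastic)                        # 8160.0
print(f"{1 - elastic / fixed:.0%}")   # 77%
```

The exact saving depends entirely on the assumed numbers; the shape of the result is what matters. The shorter and sharper the peak relative to steady-state demand, the more a peak-sized cluster pays for idle capacity.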

It is clear that cloud-based storage and data services are well-suited to deliver on the requirements of today’s modern data lake architecture, and it is fair to expect that the adoption of cloud-based data lakes will continue to grow. That said, the next challenge for the data lake architecture is driving insights from the massive amounts of data stored in these data lakes.

Sourced by Josh Klahr, VP of Product, AtScale